Chapter 3: Scientific Method

Intro

In the previous chapter, we established that all synthetic propositions are fallible. We discussed three main reasons – three problems – that explain why there can be no absolutely certain synthetic propositions. But since, by definition, all empirical theories contain synthetic propositions, no empirical theory can be proven beyond any reasonable doubt. Yet, despite the fact that all empirical theories are fallible, we seem to believe that some empirical theories are better than others. For instance, today we don’t think that Aristotelian physics is as good as that of Newton, just as we don’t think that Newtonian physics itself is as good as our contemporary physics. We don’t teach Aristotelian physics as the best available description of physical processes; if we teach it, we do so out of historical interest, but not as an accepted physical theory. We present general relativity and quantum physics as the best available descriptions of physical processes. A question arises:

If no empirical theory is absolutely true, then why do we think that our current theories are better than the theories of the past?

In other words:

How do we decide which theories should become accepted?

This is the central question of this chapter. But before we proceed to the question itself, we need to clarify what we mean by acceptance.

Acceptance, Use, and Pursuit

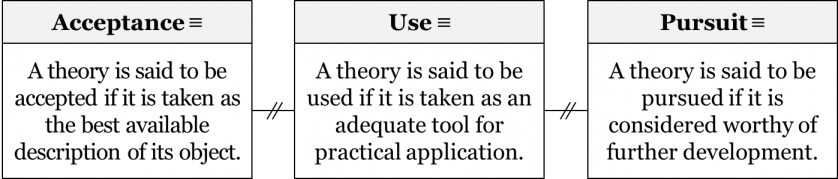

A community can take at least three different epistemic stances towards a theory – acceptance, use, and pursuit. A theory is said to be accepted if it is taken as the best available description of its object. In contrast, a theory is said to be used if it is taken as an adequate tool for practical application. Finally, a theory is said to be pursued if it is considered worthy of further development. Here are the respective definitions:

Let’s take a closer look at each of these stances separately.

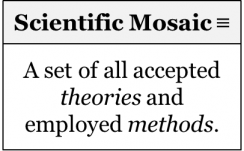

When we say a theory is accepted by a community, what we mean is that the community considers the theory to be the best available description of whatever objects it attempts to describe. Different sciences deal with different types of objects. An object under study can be natural (e.g. physical, chemical, or biological processes), social (e.g. demographic, political, or economic processes), or formal (e.g. mathematical or logical relations). For each of these objects, we can, in principle, have a theory which we take as providing the best available description of that object. It is in this sense that we nowadays accept the standard model of particle physics as the best available classification of subatomic particles. Similarly, we accept the modern evolutionary synthesis as the best available description of the process of biological evolution. By definition, the scientific mosaic of a community consists of all theories accepted by that community.

Importantly, to accept a theory doesn’t necessarily mean to consider it absolutely true. Yes, it is the case that historically there have been many communities that considered the theories they accepted as absolutely true, but this is by no means required. Any fallibilist community, including our contemporary scientific community, only accepts theories as the best on the market, not as infallibly true.

Now, acceptance is not to be confused with use. A theory is considered useful when we find a practical application for the theory. A theory can be used in physical engineering, such as the construction of bridges, spaceships, or tablets. A theory can also be used in social engineering, for instance, to engineer the victory of a certain political party in elections, or to provide a steady income to the owner of a small business. In any case, a used theory may or may not also be accepted by the community as the best available description of its object. It is possible to accept one theory but use another unaccepted theory in practice. The current status of classical physics is the best illustration of this point. While no longer accepted as the best available description of its object, classical physics is still used in a clear majority of technological applications. For instance, when the task is to build a bridge, we will most likely use the equations of classical physics rather than general relativity, because they are much simpler. It is of course possible for the same theory to be both accepted and used, but one does not entail the other. One doesn’t necessarily need to believe that a theory is the best available description of its object to find it useful.

Finally, acceptance and use should not be confused with pursuit. We say that a theory is pursued if we see some promise in its advancement and work on elaborating it. We often pursue ideas which are far from acceptable or useful because we believe that they may one day become so. In science, this happens all the time. For instance, no current physical theory explains all the particles and fundamental forces of nature. Physicists are attempting to do so by elaborating different superstring theories, which explain the fundamental forces and particles as vibrations of strings much smaller than subatomic particles. However, it is understood that none of these pursued theories is currently accepted. Scientists pursue these theories with a hope that they may one day become accepted. Importantly, a pursued theory is not necessarily accepted or useful. The opposite is also true: we can accept a theory as the best available description of its object without committing ourselves to further pursuing that theory.

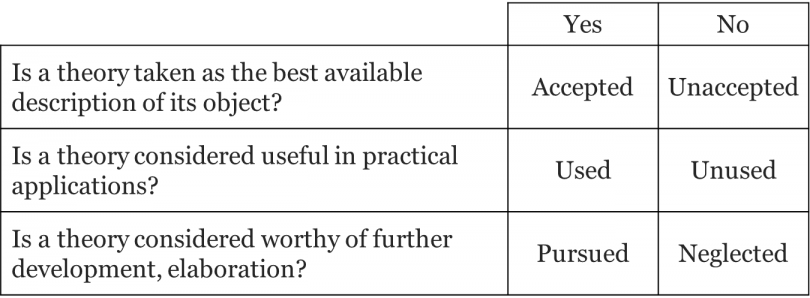

Each of these three stances also has its negation. The opposite of use is disuse or unuse: when we don’t think a theory is of much use in a specific application, we say it’s useless in that respect and as a result it remains unused. The opposite of pursuit is neglect: when nobody works on elaborating a theory, we say it is neglected. The opposite of acceptance is unacceptance: when we don’t think that a theory is the best available description of its object, we say the theory is unaccepted. Importantly, we shouldn’t confuse unacceptance with rejection, for in order to be rejected a theory needs to have been previously accepted in the mosaic. In contrast, a theory can remain unaccepted without ever having been accepted in the first place. Take, for example, M-theory (a version of string theory): it is currently unaccepted, but we cannot say that it was rejected, since it has never been accepted to begin with. Phlogiston theory, on the other hand, a once-accepted chemical theory, was rejected more than two centuries ago and is currently unaccepted. Here are the three stances with their respective negations:

In brief, it is possible to accept one theory, to use another theory in practice and, at the same time, to pursue some other promising theory. Keep in mind that these stances are not mutually exclusive: the same theory can be pursued, used, and accepted at the same time. For instance, it is possible to pursue an already accepted theory by attempting to apply it to previously unexplained phenomena. Thus, Newton’s theory of universal gravitation was already accepted when astronomers continued pursuing it and successfully predicted the existence of Neptune from anomalies in the orbit of Uranus. It is also possible to use and pursue the same theory without accepting it, as well as use and accept the theory without pursuing it. Any combination of these three stances is possible.
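For readers who find it easier to think in terms of data, the independence of the three stances can be sketched in a few lines of Python. This is only an illustration: the names `Stance`, `BASIC_STANCES`, `all_combinations`, and `examples` are hypothetical, and each example records only the stances that the text explicitly attributes to the theory.

```python
from enum import Flag, auto
from itertools import combinations


class Stance(Flag):
    """Epistemic stances a community can take towards a theory."""
    NONE = 0          # unaccepted, unused, and neglected
    ACCEPTED = auto()
    USED = auto()
    PURSUED = auto()


# The three basic stances, listed explicitly.
BASIC_STANCES = (Stance.ACCEPTED, Stance.USED, Stance.PURSUED)


def all_combinations():
    """Return every possible combination of the three stances."""
    result = []
    for size in range(len(BASIC_STANCES) + 1):
        for subset in combinations(BASIC_STANCES, size):
            combined = Stance.NONE
            for stance in subset:
                combined |= stance
            result.append(combined)
    return result


# Examples taken from the text; a flag is set only where the text says so.
examples = {
    "classical physics (today)": Stance.USED,
    "superstring theories (today)": Stance.PURSUED,
    "Newtonian gravitation (19th century)": Stance.ACCEPTED | Stance.PURSUED,
}

# Three independent yes/no stances give 2 ** 3 = 8 combinations.
print(len(all_combinations()))
```

Because each stance is an independent yes/no flag, none of them can be derived from the others, which is the point of the paragraph above.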

While it is very interesting to trace the transitions in both used and pursued theories, the question of how and why scientists come to accept their theories seems the most intriguing. This is partially due to the fact that when we acquire a new useful theory, we often also continue using our previous theories; it is possible to use a number of incompatible theories even within the same practical application. For instance, one can use both Ptolemaic and Copernican astronomy to calculate the positions of different planets for a given time. The same goes for pursuit: scientists usually pursue many different competing theories at the same time. Any field of science is full of examples of this phenomenon. Acceptance, however, is different, as we only accept the best available theories. Two incompatible theories can be simultaneously used or pursued, but not simultaneously accepted; only one of the competing descriptions can be accepted at a time. For instance, it is impossible to believe that the Earth is both flat and spherical at the same time. Importantly, only accepted theories constitute the scientific mosaic of the community. Thus, the central question of this chapter is how scientists decide which theories are the best available descriptions of the world, i.e. which theories are to be accepted.

Method

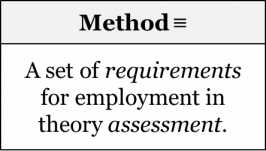

So how do we decide whether a theory is acceptable or not? How can we tell that it is the best among the plethora of competing theories? To answer this question, we have to see how scientific communities evaluate theories.

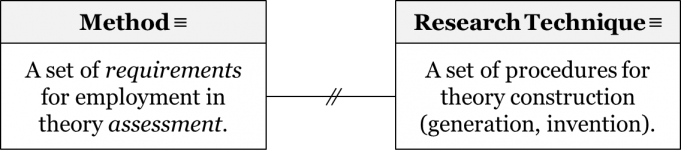

Suppose we take a couple of competing empirical theories that attempt to describe the same object. Suppose that one of these theories is currently accepted as the best description of its object and that the other theory is viewed as a contender theory that could potentially replace the accepted theory as the best available description. Now, what would it take for the contender theory to become accepted and replace the previously accepted theory in the mosaic? Because we are dealing with empirical theories, chances are we are going to need some evidence, i.e. some results of observations and experiments to help us decide if the contender theory is indeed better than the theory we currently accept. Yet, evidence alone won’t suffice. What we will also need is some set of rules for theory assessment, some criteria that a new theory should satisfy in order to become accepted. In other words, we will need some method of theory evaluation. Method is defined as a set of requirements (criteria, rules, standards, etc.) for employment in theory assessment (evaluation, appraisal, comparison, etc.):

Here are some examples of methods:

A theory is acceptable if it is simpler than its competitors.

A theory is acceptable if it solves more problems than its competitors.

A theory is acceptable if, given the available evidence, it is the most probable among the competitors.

A theory is acceptable if it explains everything explained by the previously accepted theory and also has confirmed novel predictions.

Keep in mind that these are just examples of what sorts of criteria there might be; at this stage we haven’t considered which methods scientists actually employ when evaluating theories. Before we proceed to explicating the criteria scientists actually employ in theory evaluation, we need to make two important clarifications.

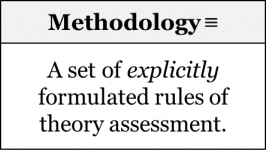

First, it is important not to confuse methods with methodologies. We define methodology as a set of openly prescribed rules of theory assessment:

Most communities have some idea as to exactly what they think an acceptable theory should look like. Many communities openly state their requirements concerning new theories in their field. This is what we call methodology; these methodologies are usually explicitly stated in textbooks, encyclopedias, and research guidelines. But it must be obvious that the rules openly prescribed by a community may or may not coincide with the rules actually employed by that community in theory assessment.

To appreciate the difference between the two, let’s look at a simple example: how do we choose our favourite books? We clearly like some books better than others. This shows that we have some expectations as to what a great book should be like. In other words, we have an implicit method for book evaluation. Now, let us try to explicate our expectations; let’s try to write down what a decent book should be like. These openly stated criteria would be our methodology of book evaluation. It is quite possible that prior to this exercise we didn’t even have any openly stated methodology for evaluating books. It is also possible that our openly stated requirements differ from our actual expectations. After all, explicating why we choose what we choose is not an easy task – be that for books, foods, or life-partners. Yet, that doesn’t seem to stop us from ranking some books higher than others. What this tells us is that implicit expectations (methods) are there regardless of whether we have any idea what those expectations are, or whether we have ever attempted to state those expectations explicitly.

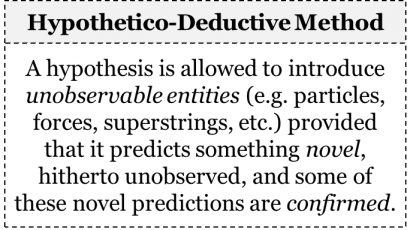

This is similar to what takes place in science. As Steven Weinberg has pointed out, “most scientists have very little idea of what scientific method is, just as most bicyclists have very little idea of how bicycles stay erect”. Thus, if we are to learn how scientists choose their theories, we have to follow Albert Einstein’s advice and look at what scientists do (i.e., their method) rather than what they say they do (i.e. their methodology). This is confirmed by the history of science, which provides many examples when the rules of the methodology openly stipulated by a community were very different from the actual expectations of that community, i.e. from the method they employ to evaluate theories. For instance, the empiricist-inductivist methodology of the late 18th century prescribed that a theory should merely generalize the results of experiments and observations without postulating any unobservable entities. Yet, it is a historical fact that virtually all of the accepted theories of the time depended crucially on the postulation of unobservable entities. For instance, the fluid theory of electricity posited that electrical phenomena are due to the presence or absence of an electric fluid, which repels itself but attracts matter. Similarly, the theory of preformation postulated that men’s semen contains homunculi, fully formed tiny people who grow in size into human beings after being deposited into the womb. Finally, Newton’s theory itself assumed the existence of such unobservables as absolute space, absolute time, and the force of gravity. This suggests that the actual expectations of the community of the time differed considerably from the methodological dicta explicitly stated in their textbooks and encyclopedias.

Thus, method and methodology shouldn’t be confused. Keeping in mind the difference between the two is especially important when reconstructing the state of the mosaic of a certain community at a certain time. To locate the methodologies of the community, we usually look into their textbooks, encyclopedias, and research guidelines. Methods, on the other hand, are much more elusive, as they are normally not on the surface, but are extracted by historians who analyse a series of transitions in a mosaic and attempt to explicate the actual expectations of the community. Now, when reconstructing the state of a given mosaic, it is important to be able to identify the methodologies that were prescribed by the community. Yet, it is even more important to be able to extract the actual expectations of the community, i.e. their methods of theory evaluation. Indeed, it is methods, not methodologies that do the actual job of theory evaluation. In order to convince a community that a certain theory is acceptable, we must make sure that the theory meets their actual expectations, regardless of whether it meets their openly proclaimed methodological rules.

Second, it is important not to confuse methods with research techniques. In scientific practice, method has two related but distinct meanings. Yes, method is often used to denote the rules of theory evaluation, just the way we use it in this textbook. But method is also often used to denote different research techniques, i.e. a set of procedures for generating ideas, constructing theories, or performing experiments, as in a Materials and Methods section of a typical scientific paper. The two are by no means the same and we will keep them separate:

It is one thing to ask how we generate (construct or invent) our theories; it’s quite another thing to ask how we evaluate (assess or appraise) them. Suppose we are trying to come up with an answer to an open question and we decide to sit down with our colleagues and brainstorm to generate some possible answers. Brainstorming is an example of a research technique, as it aims to generate as many interesting ideas as possible. Importantly, the outcome of a brainstorming session may or may not be acceptable. More likely, some of the outcomes of a brainstorming session will be deemed worthy of pursuit by an individual or a research group, with much further work needed before community acceptance becomes an issue. Whether a theory that results from this becomes accepted or not will be decided by the respective method of theory evaluation.

Separating methods from research techniques may sometimes be challenging even for professionals. There is a useful rule of thumb for distinguishing between the two. Research techniques normally contain certain steps or activities that are believed to be conducive to research. Here is a typical example of a research technique:

Step 1: Pose a question.

Step 2: Write down ideas.

Step 3: Discuss.

This research technique tells us what to do and in what order. Methods, on the other hand, don’t tell us what to do, but always tell us which conditions a theory should satisfy in order to be acceptable. That’s why methods typically begin with “a theory is acceptable if…”. Here is a typical example:

A theory about a drug’s efficacy is acceptable if the drug’s effect has been shown in a randomized controlled trial.

So, if we want to check whether something is a method or a research technique, the general rule is: if it can be formulated as “a theory is acceptable if…”, then it is a method; otherwise it is a research technique. As a quick exercise, consider the following example:

Step 1: Ask a question.

Step 2: Conduct background research.

Step 3: Propose a hypothesis.

Step 4: Design and perform an experiment.

Step 5: Record observations and analyse data.

Step 6: Evaluate the hypothesis.

In most literature, this six-step process is called “the scientific method”. But does this really sound like a method as we have defined it here? To use our rule of thumb, this formulation does not tell us how a theory is to be evaluated, but rather outlines a set of research steps. In other words, it tells us how to proceed with our scientific research, but it doesn’t tell us exactly how the fruits of this research are to be evaluated. So, we do not call this a method; we call it a research technique.

Now, which of the two, methods or research techniques, are we going to focus on? While it might be interesting to inquire into the ways scientists of a certain period generated their ideas, for the purposes of our discussion, what matters is how these ideas were evaluated by the community and whether they were accepted as a result of that evaluation. This reflects the general attitude of scientists who don’t really care about the particular circumstances of a theory’s creation; what they care about is whether the theory actually holds water, i.e. whether it is the best available description of its object. Thus, it makes little difference where exactly a certain theory comes from: I might have seen it in my dreams; I might have used a high-end heuristic technique to generate it; I might have had contact with aliens who told me all about it; I might have even stolen it from a colleague. The provenance of a theory might be an interesting topic when studying the intellectual biography of the theory’s author. Alternatively, the provenance might become relevant when it plays some role in the criteria of the method employed at the time. If, for instance, it turns out that, other things being equal, a community gives preference to theories created by the members of the same nationality, ethnicity, religion, etc., then the provenance of theories becomes part of the method of that community. In any event, what matters is the criteria which are employed to decide whether a theory is acceptable or not. Thus, we will be focusing not on research techniques, but on methods of theory evaluation.

Explicating the Method of Science

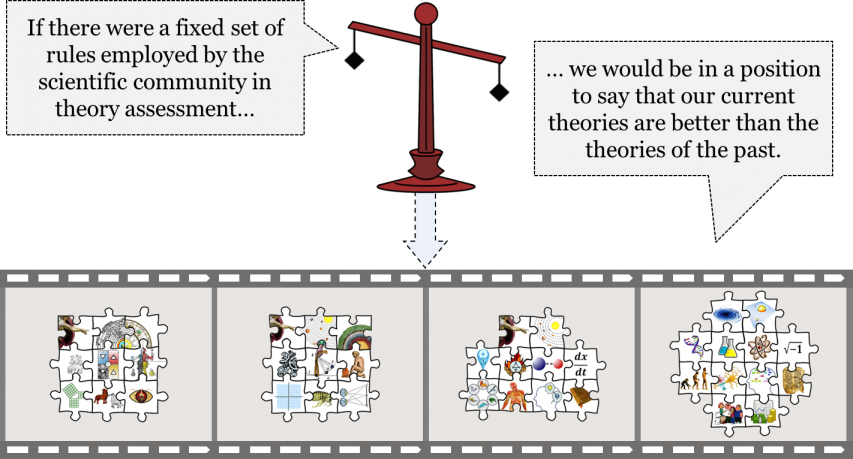

Now that we’ve established that theories are evaluated by a method, we can consider what those expectations, rules, or criteria actually are. That is, what specific criteria should a theory satisfy in order to become accepted? If there were a fixed set of criteria that scientists of all times and places employed in evaluating their theories, then we would have a good argument that transitions from one accepted theory to the next are not random, but rational. If we could only show that there is an unchangeable method of theory evaluation, then all changes in any mosaic would be governed by this fixed, transhistorical method of science. In that hypothetical scenario, the process of scientific change would look like this:

Naturally, this cosy picture would only make sense if there indeed were a fixed scientific method. But is there such a thing? In other words:

Is there an unchangeable (fixed, transhistorical) method of science?

For most of the history of knowledge, it was generally assumed that while scientific theories change through time, the criteria that scientists employ when assessing competing theories remain somehow fixed. Since the days of Aristotle, if not earlier, philosophers have attempted to openly formulate the requirements of this fixed method of science. Over the centuries, there have been a great many attempts to unearth this elusive scientific method. These attempts can be grouped into several traditions with very diverse formulations of the scientific method. Here are very brief summaries of some of the most notable attempts:

Inductivist-empiricist: a theory is acceptable if it is based inductively on experience.

Conventionalist-simplicist: a theory is acceptable if it explains the greatest number of phenomena in the simplest way.

Pragmatist: a theory is acceptable if it solves the greatest number of problems.

Hypothetico-deductivist: a theory is acceptable if its predictions are confirmed in experiments and observations.

Note that this brief outline doesn’t even scratch the surface of the infinitely rich world of discussions on scientific method. It’s merely meant as a general sketch.

Now, let us assume for the sake of argument that there is such a thing as a fixed (unchangeable, transhistorical) method of science. How would we spell it out? What are our actual expectations concerning new scientific theories and how can we explicate them?

The best way to explicate our expectations is by studying the transitions in our mosaic over a certain period of time. When focusing on changes in theories that have taken place since the early 1700s, two kinds of communal expectations seem to transpire. While in some cases we seem to expect a new theory to have made successful predictions of novel (hitherto unobserved) phenomena, in other cases, we seem to be perfectly happy to accept a theory even if it doesn’t make any novel predictions that have been confirmed in experiments and observations.

Historically, there have been many cases where a theory has been accepted only after some of its predictions of hitherto unobserved phenomena were confirmed. For instance, the French community of natural philosophers accepted Newtonian physics ca. 1740 only after the confirmation of one of its novel predictions – the prediction that the Earth is slightly flattened towards the poles, i.e. that it is an oblate spheroid. The theory’s prediction that the Earth is an oblate spheroid was at odds with the then-accepted view of the Earth as a prolate spheroid, i.e. as a spheroid that is slightly elongated towards the poles. The prediction of the oblate-spheroid Earth was confirmed by several expeditions organized by the French academy of sciences in the late 1730s. Importantly, Newton’s theory became accepted in France only after these confirmations.

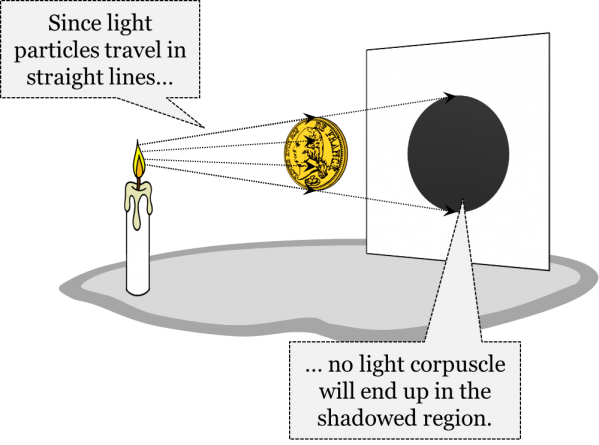

Similarly, Fresnel’s wave theory of light became accepted ca. 1820, right after the confirmation of one of its novel predictions – the prediction of a bright spot at the centre of the shadow of a circular disc. According to the previously accepted corpuscular theory of light, light is a chain of tiny particles that travel along straight lines. Like any other particles, light particles were expected to travel along straight lines unless they were affected by an obstacle. In particular, it followed from the corpuscular theory of light that the shadow of a circular disc must be uniformly dark.

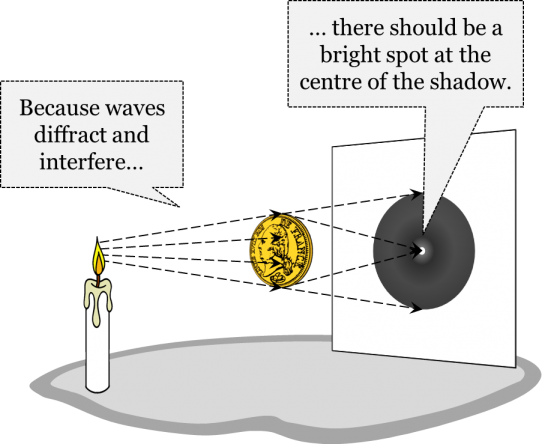

The picture suggested by Fresnel’s wave theory of light was very different. According to the wave theory, light is a wave that spreads in a universally present medium called ether, much as water waves spread from the point where a stone is dropped. This theory also suggested that, just like water waves, light waves should be able to diffract (i.e. bend behind obstacles) and interfere with one another. It is because of this ability of light waves to diffract and interfere that the theory made the prediction that there must be a bright spot at the centre of the shadow of a circular disc – a surprising novel phenomenon that had never been observed before.

The prediction was confirmed in 1819, and the wave theory of light became accepted.

The history of science is full of examples where a theory became accepted only after some of its novel predictions became confirmed by experiments and observations. Other examples of this phenomenon include the acceptance of Einstein’s general relativity ca. 1920, the theory of continental drift in the late 1960s, the theory of electroweak unification by Weinberg, Salam, and Glashow in the mid-1970s, as well as the acceptance of the existence of the Higgs boson in 2014.

Yet, a careful study of the history of science reveals many other transitions where a theory became accepted without having any confirmed novel predictions whatsoever. There have been many cases where all that the community seemed to care about was whether the theory under evaluation managed to explain the extant observational and experimental data with the desired level of accuracy and precision.

The acceptance of Mayer’s lunar theory in the 1760s provides a nice example of this. It is safe to say that predicting the trajectory of the moon with the same accuracy and precision as the trajectories of other celestial bodies had been a challenging task ever since the inception of astronomy. As a result, a great number of lunar theories had been pursued over the years. The 18th century alone gave birth to several prominent lunar theories, including those of Isaac Newton, Leonhard Euler, Alexis Clairaut, Jean d’Alembert, and Tobias Mayer. When Mayer’s theory became accepted, it was precisely because it was found to be accurate enough to determine the moon’s position to five seconds of arc. Importantly, the theory became accepted without having any confirmed novel predictions.

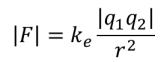

The acceptance of Coulomb’s inverse square law of electrostatics a few decades later exhibits the same pattern. Charles-Augustin de Coulomb formulated his famous law of electrostatic force in 1785. The law states:

The force of attraction/repulsion between two point charges is proportional to the product of the magnitude of each charge and inversely proportional to the square of the distance between them:

Here is the mathematical formulation of the law:

$$F = k \frac{q_1 q_2}{r^2}$$

where $k$ is a constant of proportionality.

According to the law, opposite charges attract, while like charges repel each other. The force of this attraction/repulsion (F) is greater when the charges (q1 and q2) are greater and smaller when the distance (r) is greater. Notably, Coulomb’s law became accepted in the early 1800s because it fit the available data. The community of the time didn’t seem to care about the fact that the theory didn’t provide any confirmed predictions of novel, hitherto unobserved phenomena.
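As a quick numerical illustration of these proportionalities, here is a minimal Python sketch. It assumes the standard modern form of the law, F = k·q1·q2/r², with k taken as the SI Coulomb constant. The function name `coulomb_force`, the sign convention, and the sample charges are illustrative choices rather than anything given in the text.

```python
# Coulomb constant in N·m²/C² (modern SI value, assumed here for illustration).
COULOMB_CONSTANT = 8.9875517923e9


def coulomb_force(q1: float, q2: float, r: float) -> float:
    """Signed electrostatic force (in newtons) between two point charges.

    q1, q2 are charges in coulombs and r is their separation in metres.
    Convention used in this sketch: a positive result means repulsion
    (like charges), a negative result means attraction (opposite charges).
    """
    if r <= 0:
        raise ValueError("distance r must be positive")
    return COULOMB_CONSTANT * q1 * q2 / r**2


# Two like charges of one microcoulomb each, one metre apart: repulsion.
like = coulomb_force(1e-6, 1e-6, 1.0)

# Opposite charges of the same magnitude: attraction (negative sign).
opposite = coulomb_force(1e-6, -1e-6, 1.0)

# Doubling the distance reduces the force to a quarter.
farther = coulomb_force(1e-6, 1e-6, 2.0)

print(like, opposite, farther)
```

The sign of the result reflects the attraction/repulsion distinction, and the last call shows the inverse-square dependence on distance.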

There have been many cases in the history of science when a theory became accepted in the absence of any confirmed novel predictions, i.e. cases where the mere precision and accuracy of predictions was sufficient. Other prominent examples of this phenomenon are the acceptance of the three laws of phenomenological thermodynamics in the 1850s, Clark’s law of diminishing returns in economics ca. 1900, and quantum mechanics towards 1930. Now, what does this tell us about our expectations? Specifically, do we or do we not require a theory to have confirmed novel predictions to consider it acceptable?

Several generations of philosophers have debated the role of novel predictions in theory acceptance. Among many others, William Whewell, Karl Popper, and Imre Lakatos all argued that confirmed novel predictions play an indispensable role in theory evaluation. Among their opponents, who argued that confirmed novel predictions don’t play any special role in theory evaluation, were John Stuart Mill, John Maynard Keynes, and Larry Laudan.

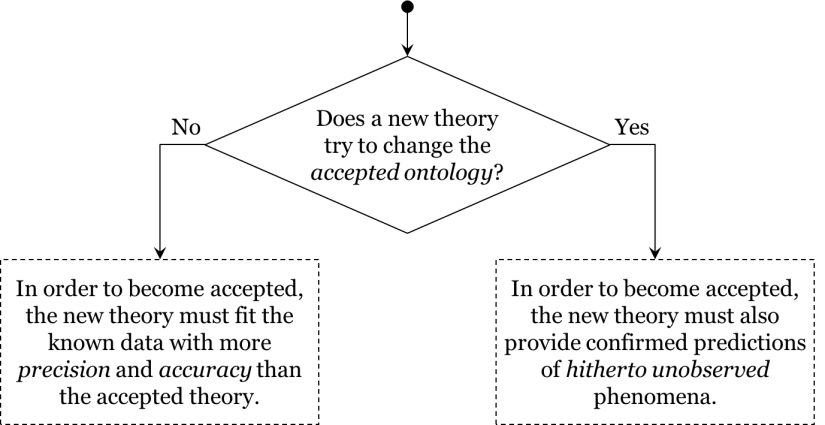

A careful examination of historical episodes evinces that scientists do expect confirmed novel predictions, but only in very special circumstances. We expect a theory to be making successful predictions of hitherto unobserved phenomena only when the theory attempts to change our accepted ontology. Now, what is an ontology? Roughly speaking, an ontology is a set of views on the types of entities and interactions that populate the world. For instance, our accepted ontology today includes our belief that there are quarks, leptons, and bosons, that there are more than one hundred chemical elements, that there are millions of species, etc. While most empirical theories presuppose an ontology, not every empirical theory requires the replacement of the previously accepted ontology. Very often, a new theory doesn’t introduce new entities or new relations, i.e. it doesn’t offer an alternative ontology, but shares the ontology of the previously accepted theory. At times, however, a new theory comes with a different ontology – with different assumptions on what entities and relations populate the world. Importantly, our expectations towards new theories depend crucially on whether a theory attempts to introduce a modification to our accepted ontology.

We seem to expect confirmed novel predictions whenever a theory attempts to alter our accepted ontology, i.e. if it tries to convince us to accept the existence of a new entity, new interaction, new process, new particle, new wave, new force, new substance, etc. For instance, Newtonian physics posited the existence of absolute space, absolute time, and the force of gravity acting at a distance – all of which were not part of the then-accepted ontology; thus, it was expected to have some confirmed novel predictions. Similarly, Fresnel’s wave theory of light attempted to introduce the idea of light-waves into our ontology; it was also expected to make successful predictions of novel phenomena. The same goes for Einstein’s general relativity, which postulated the existence of a curved space-time continuum; such a bold hypothesis could only be accepted if at least some of the novel predictions of the theory were experimentally or observationally confirmed. Note that the confirmation itself need not necessarily be done in a single crucial experiment or observation but may rather take a series of experiments and observations that collectively confirm the prediction. For example, in the nineteenth century the Schleiden-Schwann cell theory posited an ontological novelty – the idea that living cells are the fundamental unit of structure in all organisms. Thus, the theory was expected to be experimentally confirmed. However, it was accepted because cellular structure was detected under the microscope in a growing catalogue of living species, rather than as a result of one single decisive observation.

By contrast, when it comes to theories that do not attempt to change our accepted ontology, we seem to be much more lenient. If a theory doesn’t try to alter our views on the constituents of the world, we normally do not require any confirmed novel predictions – we are typically willing to deem the theory acceptable if it provides a more accurate and precise description of already known phenomena. Consider, for instance, Mayer’s lunar theory. It wasn’t expected to provide any confirmed novel predictions because it didn’t try to alter our accepted ontology. It was an attempt to explain and predict the trajectory of the moon by relying exclusively on the elements of the accepted Newtonian ontology, such as mass, force, acceleration, distance, etc. Consequently, the community of the time merely expected the theory to be more accurate and precise in its predictions of lunar motion than the previously accepted lunar theory. Likewise, Coulomb’s law wasn’t attempting to modify the then-accepted ontology of point charges and electric forces. It was merely an attempt to quantify the already known relationship (attraction/repulsion) between known entities (point charges). Naturally, the community didn’t require the theory to predict any previously unseen phenomena. The same goes for any other theory that merely provides a new description of a phenomenon by means of known relations and entities.

To summarize our expectations, if a theory fully relies on the currently accepted ontology, it is not expected to provide any confirmed novel predictions – mere accuracy and precision of its predictions suffice; otherwise, if a theory tries to convince us that there exists some new type of particle, substance, interaction, process, force, etc., it must provide confirmed novel predictions. As Carl Sagan once said: “extraordinary claims require extraordinary evidence.” After all, if a theory posits the existence of superstrings, the only way to convince the community is by confirming some of the novel predictions that follow from the theory. Here is the summary of our expectations in a flow-chart:

This is essentially the gist of the hypothetico-deductive method that we seem to employ nowadays:

Since this is an explication of the implicit expectations of scientific communities, we have to keep in mind that it may or may not be correct. It is our historical hypothesis that the requirements of the hypothetico-deductive method have actually been employed in physical sciences since the early 1700s.
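The expectations summarized above can be sketched as a small decision procedure. This is only an illustration of the explication given in this section, not a formal statement of the method: the names `Theory`, `alters_ontology`, and `meets_hd_requirements` are hypothetical, and reducing each requirement to a yes/no flag is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Theory:
    """A toy record of the features the explication cares about (hypothetical)."""
    name: str
    alters_ontology: bool              # posits a new entity, force, process, etc.
    confirmed_novel_predictions: bool  # at least some novel predictions confirmed
    more_accurate_and_precise: bool    # improves on the previously accepted description


def meets_hd_requirements(theory: Theory) -> bool:
    """Apply the expectations of the hypothetico-deductive method as explicated above."""
    if theory.alters_ontology:
        # A change to the accepted ontology calls for confirmed novel predictions.
        return theory.confirmed_novel_predictions
    # Otherwise, greater accuracy and precision on known phenomena suffice.
    return theory.more_accurate_and_precise


mayer = Theory("Mayer's lunar theory", alters_ontology=False,
               confirmed_novel_predictions=False, more_accurate_and_precise=True)
relativity = Theory("General relativity", alters_ontology=True,
                    confirmed_novel_predictions=True, more_accurate_and_precise=True)
# An invented placeholder: a theory positing new entities with no confirmed novel predictions.
bold_untested = Theory("Hypothetical new-entity theory", alters_ontology=True,
                       confirmed_novel_predictions=False, more_accurate_and_precise=True)

for t in (mayer, relativity, bold_untested):
    print(t.name, "->", meets_hd_requirements(t))
```

Note that the third theory fails even though it is marked as accurate and precise: on this explication, accuracy alone does not suffice once a theory tries to change the accepted ontology.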

Fixed Method?

Let us assume for the sake of argument that this historical hypothesis is correct. A question arises: is this the fixed method of science? Is it the case that in all historical periods and all fields of inquiry, new theories have always had to satisfy the requirements of this method in order to become accepted? More generally, can we claim that our expectations of new theories have not changed since antiquity? In short:

Is there an unchangeable (fixed, transhistorical) method of science?

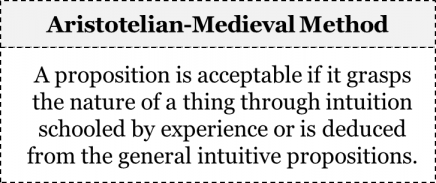

An analysis of historical episodes reveals that the hypothetico-deductive method has not always been employed in theory evaluation. At best, we can show that the method has been employed in most physical sciences since around 1700. If we went back to the times of Aristotelian-Medieval science, we would notice that the expectations of that community had little in common with the requirements of the hypothetico-deductive method. So, what were their expectations?

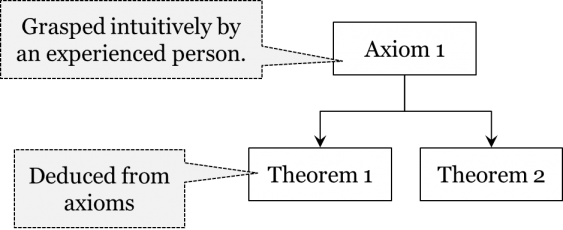

While explicating the exact expectations of a community is never an easy task, we can reasonably claim that in the Aristotelian-Medieval worldview, a theory/proposition was acceptable if it grasped the nature of a thing through intuition schooled by experience, or if it followed deductively from propositions that seemed intuitively true:

Now, how can someone’s intuition be “schooled by experience”? The underlying idea here is straightforward: the more time one spends studying a certain phenomenon, the better one is positioned to reveal the nature of that phenomenon. A beekeeper who has spent a lifetime around bees is assumed to be in a position to tell us what the nature of bees is. Similarly, an experienced arborist will have developed an intuition regarding the nature of different trees – an intuition that has been schooled by her experience. Finally, a natural philosopher who has carefully considered different types of things is best positioned to know what these things have in common; e.g. she can grasp intuitively that everything is made out of four elements (earth, water, air, fire) and that these elements tend towards their natural positions – heavy elements tend towards the centre of the universe and light elements tend towards the periphery. In Aristotelian-Medieval science, these expectations applied to any field of study: a theory would be acceptable if it appeared intuitively true to those schooled by experience, i.e. experts.

In the Aristotelian-Medieval worldview, the basic intuitions about the nature of things would be considered the axioms of their science. Once the nature of a thing under study was grasped intuitively by an expert, one would then proceed to trace the logical consequences (i.e. theorems) of these basic intuitions. For example, once it is grasped that heavy elements tend towards the centre of the universe, we can deduce a theorem that the Earth, which is predominantly a combination of the elements earth and water, is located at the centre of the universe. As a result, we have an axiomatic-deductive system, where the axioms are grasped intuitively by experts and the theorems are logically deduced from these axioms.

Importantly, the method of the Aristotelian-Medieval community had nothing to do with confirmed novel predictions; the community expected basic intuitions schooled by experience and deductions from these intuitions. Thus, trying to convince that community by an appeal to confirmed novel predictions would be a fool’s errand.
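The axiomatic-deductive structure just described can be sketched in the same spirit. The sketch below assumes that propositions are plain strings and that deduction can be approximated by simple rules of the form "premises, therefore conclusion". The names `deductive_closure`, `is_acceptable_am`, `intuitive_axioms`, and `rules` are hypothetical and introduced only for illustration, and treating the composition of the Earth as a second axiom is likewise an assumption.

```python
def deductive_closure(axioms, rules):
    """Return every proposition derivable from the axioms via the given rules."""
    known = set(axioms)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires once all of its premises are already known.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


def is_acceptable_am(proposition, intuitive_axioms, rules):
    """Acceptable if grasped intuitively by experts or deduced from such intuitions."""
    return proposition in deductive_closure(intuitive_axioms, rules)


# Intuitions schooled by experience, taken as axioms (illustrative wording).
intuitive_axioms = {
    "heavy elements tend towards the centre of the universe",
    "the Earth is predominantly made of the heavy elements earth and water",
}
# Each rule pairs a tuple of premises with the theorem that follows from them.
rules = [
    (
        ("heavy elements tend towards the centre of the universe",
         "the Earth is predominantly made of the heavy elements earth and water"),
        "the Earth is located at the centre of the universe",
    ),
]

print(is_acceptable_am("the Earth is located at the centre of the universe",
                       intuitive_axioms, rules))  # True
print(is_acceptable_am("the Earth revolves around the Sun",
                       intuitive_axioms, rules))  # False
```

Nothing in this procedure asks whether a prediction has been observationally confirmed. That absence is the point of the contrast with the hypothetico-deductive method.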

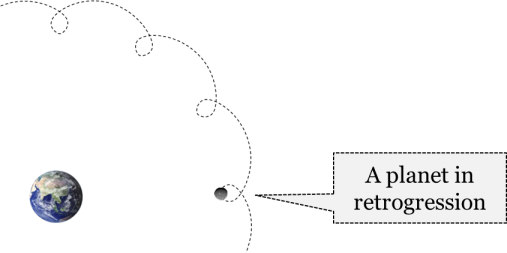

The famous case of Galileo is a great illustration of the Aristotelian-Medieval method in action. If we went back to the year 1600, we would discover that the accepted view was that of Ptolemaic geocentrism: the Earth was believed to be at the centre of the universe and all planets, the Moon, and the Sun were believed to revolve around the Earth. From the Earth, the observable motion of planets can seem rather strange against the relatively uniform motion of the stars. While the stars seem to always travel in the same direction and at the same rate, a dedicated observer will notice that planets seem to travel at different speeds, and sometimes move in reverse – what has been called retrograde motion.

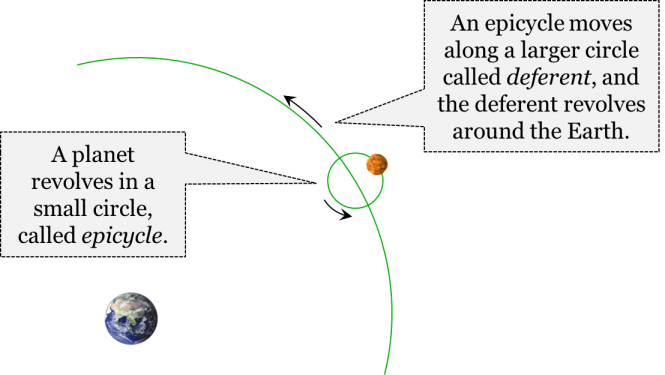

This meandering across the sky is actually what earned the planets their name: recall that the Greek word planētēs means wanderer. For Ptolemy, all heavenly bodies revolved around the Earth in circular paths that were called deferents. To account for the planets’ easily observable retrograde motion, Ptolemy suggested that planets moved in an additional, smaller circular orbit along their deferent, called the epicycle. Such combinations of epicycles and deferents helped reproduce the observed motions of every planet.

In fact, the theory was extremely successful in its predictions of future positions of planets.

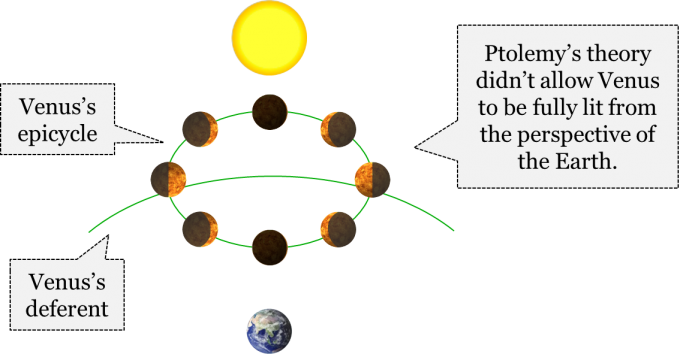

Since, in this theory, Venus revolves around the Earth, it was believed that an observer on Earth could never see Venus fully lit; we could, at best, see it half-lit:

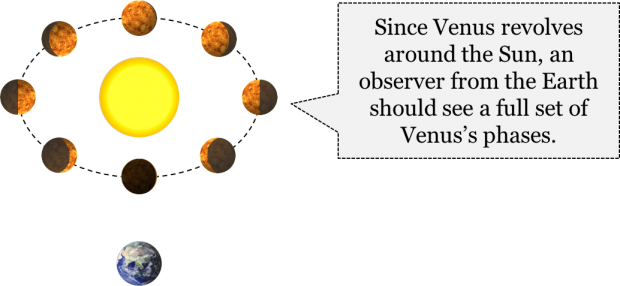

A few alternative cosmological theories were pursued at the time, including the heliocentric theory of Copernicus, championed famously by Galileo. In the Copernican heliocentric theory, Venus and the other planets all revolve around the Sun and, thus, an observer on Earth should be able to see a full set of Venus’s phases:

This was one of the novel predictions of the Copernican theory. It was not until the invention of the telescope that it became possible to test it. In 1610, Galileo famously tested this prediction and confirmed that we can see a full set of phases of Venus. Had the community of the time cared about confirmed novel predictions, the Copernican theory would have become accepted. Unfortunately for Galileo, the community of the time didn’t care for confirmed novel predictions, as they expected new theories to be commonsensical, i.e. to appear intuitively true to experts. But the idea of the Earth being anywhere but at the centre of the universe was anything but commonsensical. At the time, the idea of the Earth’s revolution around the Sun and its diurnal rotation around its own axis appeared equally counterintuitive. Not only was it against our everyday observations that seemed to suggest that the Earth is static, but it also contradicted the laws of the then-accepted Aristotelian natural philosophy, which entailed that because the Earth is made of the elements earth and water it could not possibly revolve or rotate. It is not surprising, therefore, that Galileo failed to convince the community of his time. Importantly, his failure had nothing to do with the alleged dogmatism and obstinacy of the university-educated Aristotelian clergy of the time, but with the fact that the expectations of the community had nothing to do with observational confirmations of novel predictions. It is as though Galileo were trying to beat everyone at chess when others were playing checkers.

It took the Copernican theory almost another century to become accepted. But how did it become accepted? The short answer is that it was incorporated into a more general system of the world which finally managed to meet the expectations of the community. The author of that system was René Descartes, whose views we will revisit on several occasions, most notably in chapter 8. Descartes devised a system of the world with the goal of meeting the requirements of the Aristotelian-Medieval community. The most comprehensive exposition of this new system was presented in his Principia Philosophiae (Principles of Philosophy), first published in 1644. Descartes clearly realized that if his theory was going to succeed it had to strike the experts as intuitively true. But, says Descartes, if it is intuitive truth we are after, wouldn’t it be more reasonable to start by erasing everything we think we know and then proceed to accepting only those theories that are established beyond any reasonable doubt? That’s exactly what Descartes set out to accomplish.

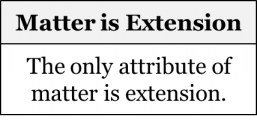

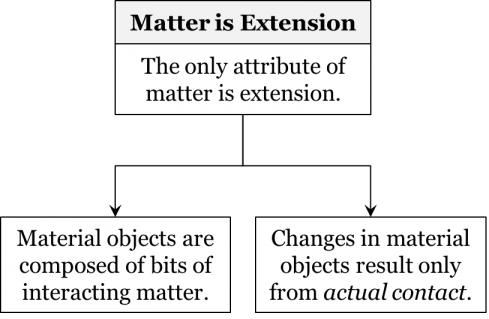

Among many other topics, Descartes also attempted to reveal the attributes of matter, i.e. those qualities of material objects that are indispensable. Take any material object: a rock, a plant, an animal, a human body – anything. Question: what are the qualities that any material object must necessarily have? A material object can have some colour, sound, taste, smell, and shape. Of these usual suspects, which are truly indispensable? For instance, can we think of a material thing that doesn’t have any colour? Yes, says Descartes, it is possible: we can think of something material yet transparent, such as air. Therefore, colour is not an indispensable property of material objects. But how about sound: can we conceive of a material object that doesn’t make any sound? Clearly, we can do that. Therefore, according to Descartes, sound is not indispensable. The same goes for taste: there are many objects that don’t have any taste. Moreover, there are some foods that are supposed to have taste but don’t seem to have any (I am looking at you, American apricots)! Thus, taste is not an indispensable quality of matter. What about smell? Clearly, not everything smells in our world, so smell is yet another dispensable quality. This only leaves us with shape. Descartes argues that we cannot possibly conceive of a material object that does not occupy some space. A material thing can have a well-defined shape, as is the case for most of the solid objects around us, or it could have a pretty fuzzy shape, as is the case with water, fire, or air. But in any case, all material objects necessarily occupy some space, i.e. they are all spatially extended. Indeed, can we conceive of a material object that doesn’t occupy any space whatsoever, even a very minute space? That’s impossible, says Descartes; if you happen to come across a material object that doesn’t occupy any space whatsoever, then it’s not much of a material object, is it? 
Recall that infamous cheese shop from the Monty Python sketch that didn’t have any cheese: it wasn’t much of a cheese shop, was it? Similarly, for a thing to be material, according to Descartes, it has to be spatially extended, i.e. it must occupy some space. This brings Descartes to the formulation of one of his fundamental principles – the idea that the only attribute of matter is extension:

Once again, by attribute, Descartes means an indispensable quality.

Once we accept this principle, a number of notable conclusions logically follow. One such conclusion is the idea that material objects should be composed of bits of interacting matter, each spatially extended. Indeed, says Descartes, if all material objects can do is occupy some space, then everything in the universe is composed of smaller bits of matter that also occupy some space and interact with each other. But how can two bits of matter possibly affect each other if all they can do is occupy space? Consider two billiard balls. How can one billiard ball possibly affect another billiard ball? Their actual contact seems to be the only way in which they can interact. According to Descartes, that’s exactly the case with all interactions between material objects; because they all are spatially extended bits, they can only affect each other by touching and pushing. That requires actual contact; for Descartes, there can be no such thing as action at a distance.

While these conclusions may sound trivial, they are the cornerstones of the mechanistic Cartesian worldview that we will study in chapter 8.

Importantly, Descartes wanted to persuade his Aristotelian peers that these conclusions are nothing but intuitive truths, just as Aristotle himself would have wanted. Isn’t it intuitive, says Descartes, that the only attribute of matter is extension? It is common sense! So, if we are expecting commonsensical, intuitively true axioms, we have them. In essence, Descartes was claiming that his theory satisfies the Aristotelian-Medieval requirements better than Aristotle’s theory itself!

The difference between Descartes’ strategy and that of Galileo is apparent. Where Galileo was trying to convince the community by citing the results of his observations, Descartes knew that the only way he could convince the Aristotelians was by meeting their expectations. So, it shouldn’t come as a surprise that it was Descartes’ theory that became accepted on the Continent ca. 1700. The key point is that it was accepted not because it provided confirmed novel predictions, but because it appeared intuitively true to the community of the time. What we need to appreciate is that the method of the time was very different from the hypothetico-deductive method we employ nowadays.

Summary

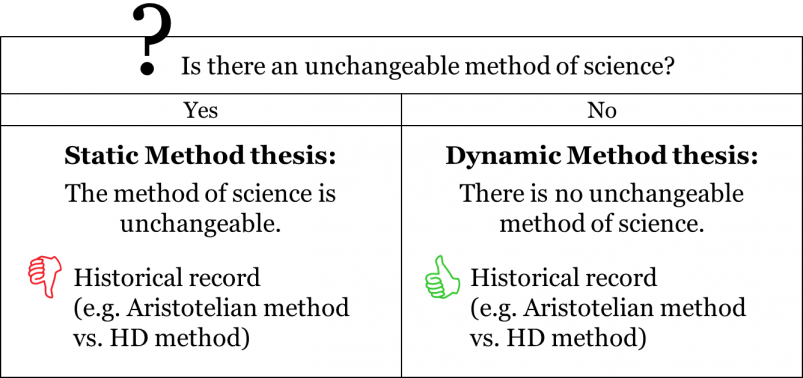

To answer the central question of this chapter: it is accepted nowadays that there is no fixed (unchangeable, transhistorical) method of science. The historical record shows that, when studying the world, we transition not only from one theory to the next, but also, importantly, from one method of theory evaluation to the next. In other words, we nowadays reject the idea of a static method and accept the dynamic method thesis, thus:

For most of the history of knowledge the static method thesis was taken for granted. While Aristotle, Newton, Kant, and Popper would never agree as to what the requirements of the method are, they would all agree that there is one set of requirements that any acceptable theory should meet. The transition to the dynamic method thesis took place ca. 1980 and was mostly due to the pioneering work of Thomas Kuhn and Paul Feyerabend as well as a number of historians of science, who showed that methods of theory evaluation often change as we learn new things about the world. Nowadays, it is commonly accepted that there is no such thing as a fixed method of science; methods change.

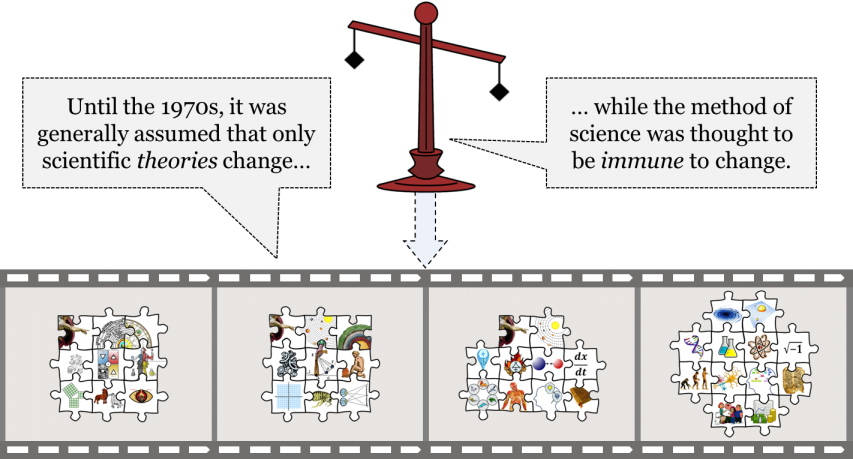

This transition seriously reshaped our views on the process of scientific change. Until about 1980, it was assumed that the process of scientific change concerns only theories, while methods were thought to be external to the process, as if they were guiding the process of theory change from the outside:

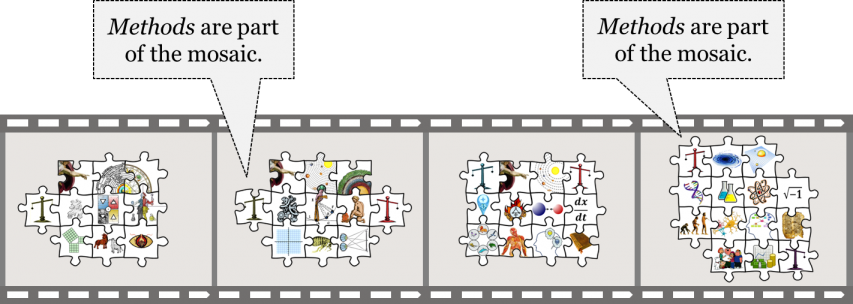

Our current view is different, for we no longer think that methods are external to the process of scientific change. In fact, they are part of the process of scientific change:

But if we understand that methods are part of the process of scientific change, we should redefine our notion of scientific mosaic. We originally defined it as a set of all accepted theories. Now the notion of scientific mosaic should also include all methods employed by the community at a certain time:

Note that just as we accept multiple theories at the same time, it is possible for multiple methods to be employed in the same mosaic. For instance, our method of drug testing will likely be different from our method for evaluating the efficacy of surgical techniques. Similarly, the specific method for evaluating hypotheses concerning subatomic particles need not be the same as the method for evaluating hypotheses concerning the existence of different biological species. In short, a community may have different expectations regarding hypotheses that pertain to different domains.

Thus, we arrive at what is arguably the most challenging question in contemporary philosophy of science. If there are no fixed methods of theory evaluation, does it mean that the process of scientific change is irrational? In other words, why is it that nowadays we employ the hypothetico-deductive method and not, say, the Aristotelian-Medieval method? Is the choice of methods arbitrary or is there a certain mechanism that governs the process of transitions from one employed method to the next? If it turns out that the choice of methods is random – if anyone can freely choose her own method of theory evaluation – then how can we reasonably argue that our contemporary science is better than the science of Aristotle, Descartes, or Newton? If we were to concede that there is no mechanism guiding transitions from one method to the next, then there would be no way of reasonably arguing that one method is better than another and we would end up in what philosophers call relativism. So, is there a mechanism that guides the process of changes in theories and methods alike? We will tackle this question in the next chapter.