Chapter 5: Scientific Progress

Intro

In previous chapters we’ve established that the theories within mosaics change through time. We have also made the case that these changes occur in a regular, law-like fashion. Recognizing that a mosaic’s theories change through time, and understanding how they change, is important. But what makes us think that our empirical theories succeed in describing the world? Yes, we can claim that they change in a law-governed fashion, i.e. that theories only become accepted when they meet the expectations of the respective community. But does that mean that our accepted physical, chemical, biological, psychological, sociological, or economic theories actually manage to tell us anything about the processes, entities, and relations they attempt to describe? In other words, we know that the process of scientific change exhibits certain general patterns, but does that necessarily mean that this law-governed process of changes in theories and methods actually takes us closer to a true description of the world as it really is?

The position of fallibilism that we have established in chapter 2 suggests that all empirical theories are – at best – approximations. Yet, it doesn’t necessarily follow from this that our empirical theories actually succeed in approximating the world they attempt to describe. To begin this chapter, we will tackle the following question:

Do our best theories correctly describe the mind-independent external world?

Before we go any further, it’s important to keep in mind that we are committed to the philosophical viewpoint of fallibilism. As such, whenever we talk about the correctness, success, or truth of empirical theories, we must remember that those theories are always subject to the problems of sensations, induction, and theory-ladenness, and are therefore neither absolutely certain nor absolutely true. So, the question is not whether our empirical theories provide absolutely correct descriptions of the world; it is nowadays accepted that they do not. The question is whether we can claim that our best theories get at least something correct, i.e. whether they succeed as approximate descriptions of the world as it really is.

Scientific Realism vs. Instrumentalism

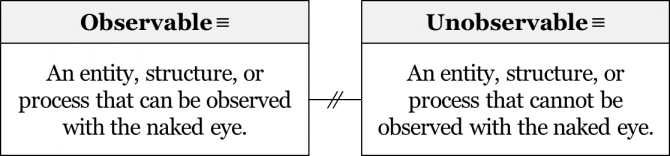

Many theories of empirical science attempt to explain easily observable facts, events, processes, relations, etc. Let’s take the free-fall of a coffee cup as an example. We can easily observe a coffee cup fall from a table to the ground, i.e. the fall itself is an observable process. For instance, we can measure the time it takes the coffee cup to hit the ground and formulate a proposition that describes the results of our observation: “it takes 0.5 seconds for the cup to hit the ground”. But what about explaining why the coffee cup falls from the table to the ground? To describe the underlying mechanism which produces the fall, we nowadays cite the theory of general relativity. The explanation provided by general relativity is along these lines: the coffee cup falls to the ground because the Earth’s mass bends the space-time around it in such a way that the inertial motion of the cup is directed towards the ground. While a cup falling through the air is easily observable, the bent space-time which general relativity invokes is not. Similarly, many scientific theories postulate the existence of entities, structures, or processes that are too small (like quarks, atoms, or microbes), too large (like clusters of galaxies), too fast (like the motion of photons), or too slow (like the process of biological evolution by natural selection) to be directly detected with the human senses. In addition, some of the entities, structures, or processes invoked by our scientific theories are such that they cannot be directly observed even in principle (like space-time itself). Any process, structure, or entity that can be perceived without any technological help (“with the naked eye”) is typically referred to as observable. In contrast, any process, structure, or entity that can’t be observed with the naked eye is referred to as unobservable.

Since many – if not most – empirical scientific theories invoke unobservables to help explain their objects, this makes our initial question a little bit more interesting. When we ask whether our theories correctly describe the external world, we are actually asking the more specific question:

Do our scientific theories correctly describe both observables and unobservables?

Generally speaking, scientists and philosophers of science today accept that our theories succeed in correctly describing observables, even as fallibilists. When we’ve dropped that coffee cup for the 400th time and still clock its airtime at 0.5 seconds, we’ll consider the claim “it takes 0.5 seconds for the cup to hit the ground” confirmed and correct. But how should we understand claims concerning unobservables, like invoking the notion of bent space-time to explain the fall of the coffee cup? Can we legitimately make any claims about unobservable processes, entities, or relations? Thus, the question of interest here is that concerning unobservables:

Do our scientific theories correctly describe unobservables?

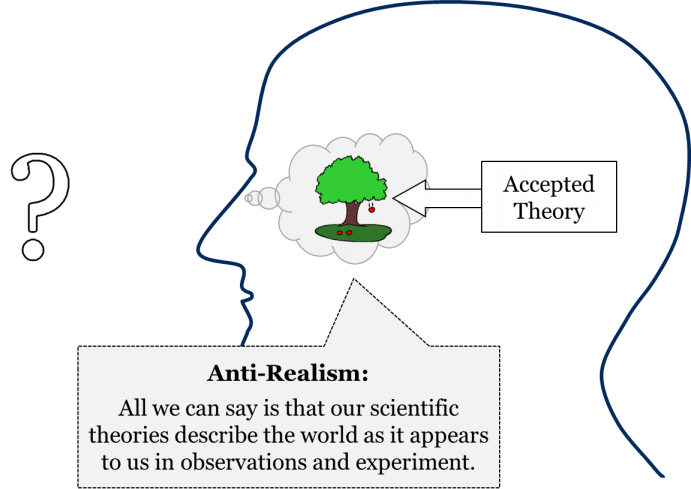

Many philosophers are relatively pessimistic when it comes to our ability to make legitimate claims about the unobservable entities, structures, or processes posited by our scientific theories. Those who hold this position would answer “no” to the question of whether scientific theories correctly describe unobservables. This is the position of anti-realism. While anti-realists don’t deny that our theories often correctly describe observables, they do deny that we can make any legitimate claims about the reality of unobservable entities, processes, or relations invoked by our scientific theories. For instance, according to an anti-realist, we are not in a position to say that there is such a thing as bent space-time.

The position of anti-realism is also often called instrumentalism because it treats scientific theories generally – and the parts of those theories that make claims about unobservables specifically – merely as tools or instruments for calculating, predicting, or intervening in the world of observables. Thus, according to instrumentalists, the notion of bent space-time is merely a useful mathematical tool that allows us to calculate and predict how the locations of observable objects change through time; we can’t make any legitimate claims concerning the reality of that bent space-time. In other words, while instrumentalism holds that theories invoking unobservables often yield practical results, those same theories might not actually be succeeding in describing genuine features of the world.

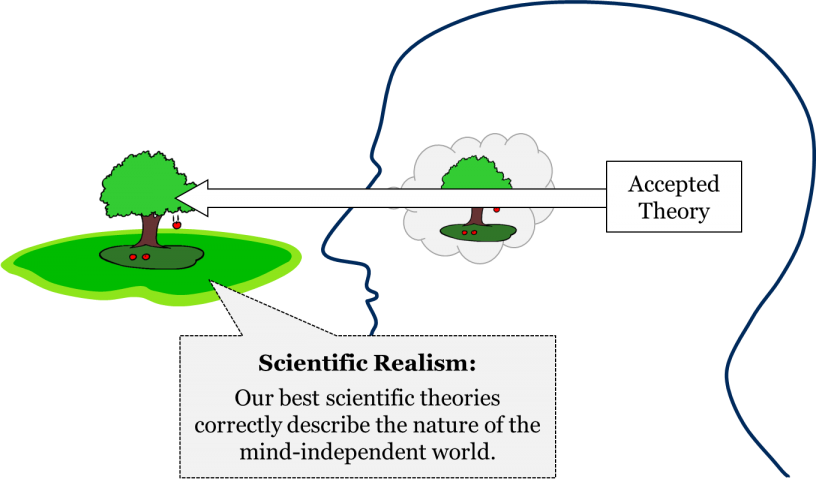

But there are also those who are optimistic when it comes to our ability to know or describe unobservables posited by our scientific theories. This position is called scientific realism. Those who hold this position would answer “yes” to the question of whether scientific theories correctly describe unobservables. For them, unobservables like quarks, bosons, natural selection, and space-time are not merely useful instruments for making predictions of observable phenomena, but denote entities, processes, and relations that likely exist in the world.

It is important to note that the question separating scientific realists from instrumentalists doesn’t concern the existence of the external mind-independent world. The question of whether our world of observable phenomena is all that there is or whether there is an external world beyond what is immediately observable is an important question. It is within the domain of metaphysics, a branch of philosophy concerned with the most general features of the world. However, that metaphysical question doesn’t concern us here. Our question is not about the existence of the external mind-independent world, but about the ability or inability of our best scientific theories to tell us anything about the features of that mind-independent external world. Thus, an instrumentalist doesn’t necessarily deny the existence of a reality beyond the world of observable phenomena. Her claim is different: that even our best scientific theories fail to reveal anything about the world as it really is.

To help differentiate scientific realism from instrumentalism more clearly, let’s use the distinction between acceptance and use we introduced in chapter 3. Recall that it is one thing to accept a theory as the best available description of its respective domain, and it’s another thing to use it in practical applications. While a community can use any number of competing theories in practice (like different tools in a toolbox), it normally accepts only one of the competing theories as the best available description of its object.

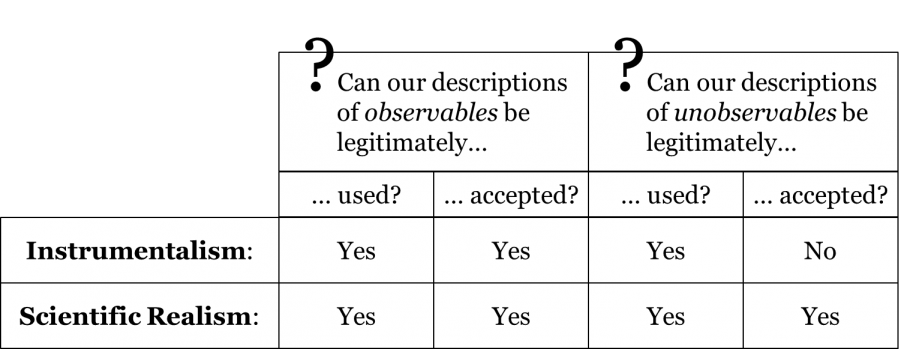

Now, instrumentalists and scientific realists don’t deny that communities do in fact often accept theories and use them in practice; the existence of these epistemic stances in the actual practice of science is beyond question. What separates instrumentalists and scientific realists is the question of the legitimacy of those stances. The question is not whether scientists have historically accepted or used their theories – it is clear that they have – but whether it is justifiable to do so. Since we are dealing with two different stances (acceptance and use) concerning two different types of claims (about observables and unobservables), there are four distinct questions at play here:

Can we legitimately use a theory about observables?

Can we legitimately accept a theory as describing observables?

Can we legitimately use a theory about unobservables?

Can we legitimately accept a theory as describing unobservables?

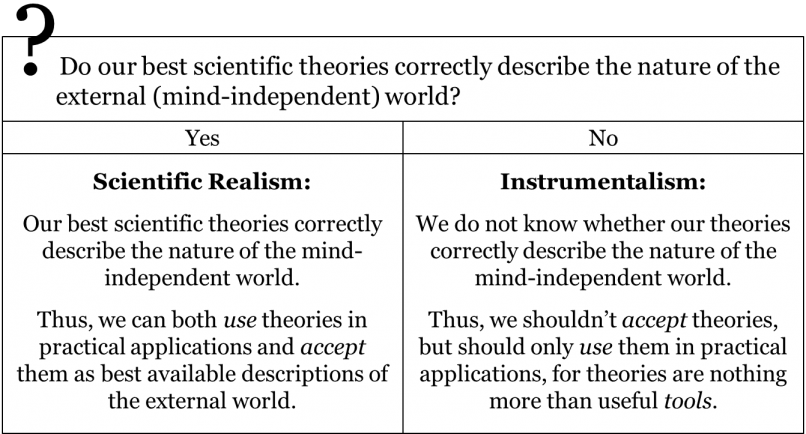

As far as the practical use of theories is concerned, there is no disagreement between instrumentalists and realists: both parties agree that any type of theory can legitimately become useful. This goes both for theories about observable phenomena and theories about unobservables. Instrumentalists and realists also agree that we can legitimately accept theories about observables. Where the two parties differ, however, is in their attitude concerning the legitimacy of accepting theories about unobservables. The question that separates the two parties is whether we can legitimately accept any theories about unobservables. In other words, of the above four questions, realists and instrumentalists only differ in their answer to one of them. This can be summarized in the following table:

For an instrumentalist, theories concerning unobservables can sometimes be legitimately used in practice (e.g. bridge building, telescope construction, policy making) but never legitimately accepted. For a realist, theories concerning unobservables can sometimes be legitimately used, sometimes legitimately accepted, and sometimes both legitimately used and accepted. The following table summarizes the difference between the two conceptions:

Now that we’ve established the basic philosophical difference between instrumentalism and realism, let’s consider some historical examples to help illustrate these contemporary distinctions.

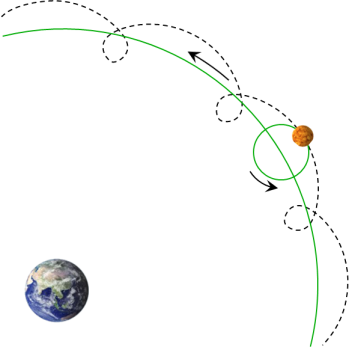

For centuries, astronomers accepted Ptolemy’s model of a geocentric universe, including the Ptolemaic theory of planetary motion. First, recall that for Ptolemy the observable paths of the planets, including their retrograde motion, were produced by a combination of epicycles and deferents. Here is the diagram we considered in chapter 3:

In addition to being accepted, this epicycle-on-deferent theory and its corresponding mathematics were used for centuries to predict the positions of the planets with remarkable accuracy. The tables of planetary positions composed by means of that theory (the so-called ephemerides) would then be used in astrology, medicine, navigation, agriculture, etc. (See chapter 7 for more detail.)

Now let’s break this example down a little bit. On the one hand, the planets and their retrograde motion are observables: we don’t need telescopes to observe most of the planets as points of light, and over time we can easily track their paths through the night sky with the naked eye. On the other hand, from the perspective of an observer on the Earth, deferents and epicycles are unobservables, as they cannot be observed with the naked eye. Instead, they are the purported mechanism of planetary motion – the true shape of the orbs which underlie the wandering of the planets across the night sky.

So how would we understand Ptolemy’s epicycle-on-deferent theory of planetary motion from the perspectives of instrumentalism and realism? For both instrumentalists and realists, the Ptolemaic account of the meandering paths of the planets through the night sky would be acceptable since these paths are observable. That is, both conceptions agree that, at the time, the Ptolemaic predictions and projections for planets’ locations in the night sky could be legitimately considered the best description of those phenomena. But instrumentalism and realism disagree over whether it was legitimate for medieval astronomers to also accept those parts of Ptolemy’s theory which referred to unobservable deferents and epicycles. According to realism, at the time, Ptolemy’s theory about epicycles and deferents could be legitimately accepted as the best available description of the actual mechanism of planetary motion. In contrast, the instrumentalist would insist that astronomers had to refrain from committing themselves to the reality of epicycles and deferents, and instead had to focus on whether the notions of epicycle and deferent were useful in calculating planetary positions.

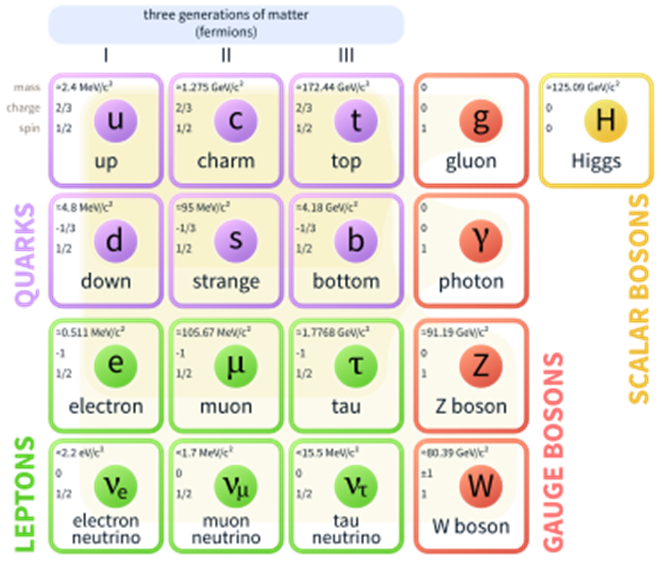

Consider a slightly more recent historical example: the standard model of quantum physics. According to the standard model, there are six quarks, six leptons, four gauge bosons and one scalar boson, the recently discovered Higgs boson. Here is a standard depiction of the standard model:

For our purposes, we can skip the specific roles each type of elementary particle plays in this model. What matters for our discussion is that all of these elementary particles are unobservables; due to their minute size, they cannot be observed with the naked eye. In any event, it is a historical fact that this standard model is currently accepted by physicists as the best available description of its domain.

The philosophical question separating instrumentalists and scientific realists is whether it is legitimate to believe that these particles are more than just a useful calculating tool. Both scientific realists and instrumentalists hold that we can legitimately use the standard model to predict what will be observed in a certain experimental setting given such-and-such initial conditions. However, according to instrumentalists, scientists should not accept the reality of these particles, but should consider them only as useful instruments that allow them to calculate and predict the results of observations and experiments. In contrast, scientific realists would claim that we can legitimately accept the standard model as the best available description of the world of elementary particles, i.e. that the standard model is not a mere predicting tool.

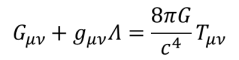

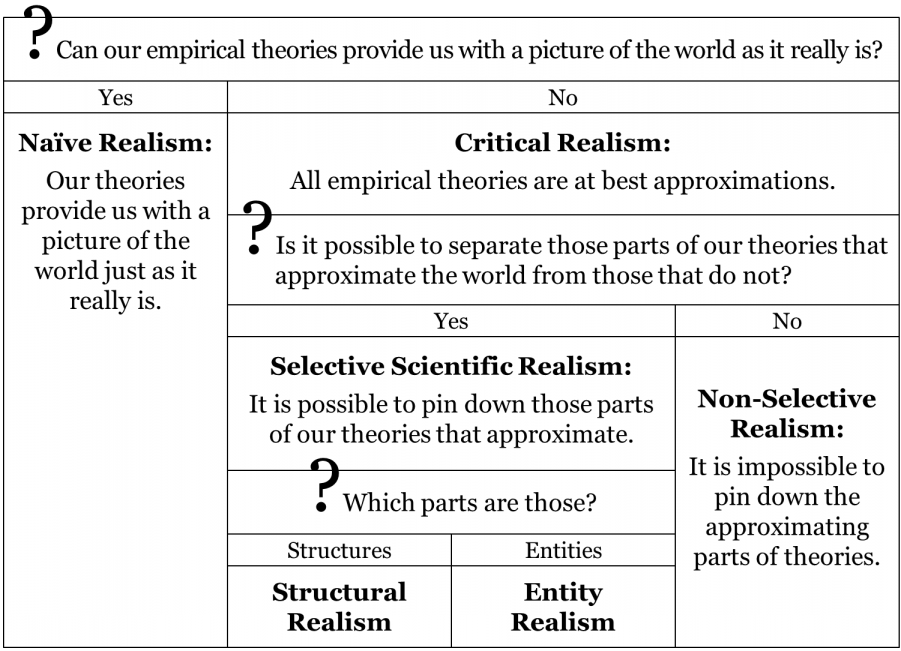

Species of Scientific Realism

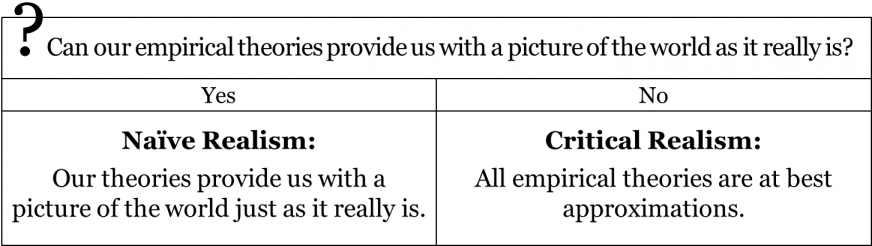

A historical note is in order here. In the good old days of infallibilism, most philosophers believed that, in one way or another, we manage to obtain absolute knowledge about the mind-independent external world. Naturally, the list of theories that were considered strictly true changed through time. For instance, in the second half of the 18th century, philosophers would cite Newtonian physics as an exemplar of infallible knowledge, while the theories of the Aristotelian-medieval mosaic would be considered strictly true in the 15th century. But regardless of which scientific theories were considered absolutely certain, it was generally accepted that such absolute knowledge does exist. In other words, most philosophers accepted that our best scientific theories provide us with a picture of the world just as it really is. This species of scientific realism is known as naïve realism.

However, as we have seen in chapter 2, philosophers have gradually come to appreciate that all empirical theories are, in principle, fallible. Once the transition from infallibilism to fallibilism was completed, the position of naïve realism could no longer be considered viable, i.e. it was no longer possible to argue that our best theories provide us with the exact image of the world as it really is. Instead, the question became:

Do our scientific theories approximate the mind-independent external world (i.e. the world of unobservables)?

Thus, the question that fallibilists ask is not whether our theories succeed in providing an exact picture of the external world – which, as we know, is impossible – but whether our theories at least succeed in approximating the external world. It is this question that nowadays separates scientific realists from instrumentalists. Thus, the version of scientific realism that is available to fallibilists is not naïve realism, but critical realism, which holds that empirical theories can succeed in approximating the world. While critical realists believe that at least some of our best theories manage to provide us with some, albeit fallible, knowledge of the world of unobservables, nowadays there is little agreement among critical realists as to how this approximation is to be understood.

Once the fallibility of our empirical theories became accepted, many critical realists adopted the so-called selective approach. Selective scientific realists attempt to identify those aspects of our fallible theories which can be legitimately accepted as approximately true. On this selective approach, while any empirical theory taken as a whole is strictly speaking false, it may nevertheless have some parts which scientists can legitimately accept as approximately true. Thus, the task of a selective scientific realist is to identify those aspects of our theories which warrant such an epistemic stance. While selective scientific realism has many sub-species, here we will focus on two of the most common varieties – entity realism and structural realism. These two varieties of selective realism differ in their answers to the question of which aspects of our theories scientists can legitimately consider as approximating reality.

According to entity realism, scientists can be justified in accepting the reality of unobservable entities such as subatomic particles or genes, provided that they are able to manipulate these unobservable entities in such a way as to accurately bring about observable phenomena. For instance, since scientists can accurately predict what exactly will be observed when the putative features of an electron are being manipulated, they have good reason to accept the reality of that electron. Entity realists hold that it is the scientists’ ability to causally manipulate unobservable entities and produce very precise observable outcomes that justifies their belief that these unobservable entities are real. The key reason why some philosophers find the position of entity realism appealing is that it allows one to continue legitimately accepting the reality of an entity despite any changes in our knowledge concerning the behaviour of that entity. For example, according to entity realists, we can continue accepting the reality of an electron regardless of any additional knowledge concerning specific features of the electron and its behaviour that we may acquire in the future. Importantly, entity realism takes a selective approach as to which parts of our theories can be legitimately accepted and which parts can only be considered useful.

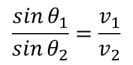

A different version of the selective approach is offered by structural realism. While structural realists agree with entity realists that scientists can legitimately accept only certain parts of the best scientific theories, they differ drastically in their take on which parts scientists are justified in accepting. According to structural realists, scientists can justifiably accept not the descriptions of unobservable entities provided by our best theories, but only the claims these theories make about unobservable structures, i.e. about certain relations that exist in the world. One key motivation for this view is the historical observation that our knowledge of certain structural relations is often preserved despite fundamental changes in our views concerning the types of entities that populate the world. Consider, for instance, the law of refraction from optics:

The ratio of the sines of the angle of incidence θ1 and the angle of refraction θ2 is equal to the ratio of the phase velocities (v1 / v2) in the two media.
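
Written in symbols, the same law takes the following form. The notation is the one used in the statement above, with the subscripts 1 and 2 assumed to label the first and second medium respectively:

```latex
\frac{\sin\theta_1}{\sin\theta_2} = \frac{v_1}{v_2}
```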

Setting aside the question of who is to be rightfully credited with the discovery of this law – René Descartes, or the Dutch astronomer Willebrord Snellius, or even the Persian scientist Ibn Sahl – we can safely say that all theories of light accepted since the 17th century contained a version of this law. This goes for Descartes’ mechanistic optics, Newton’s optics, Fresnel’s wave theory of light, Maxwell’s electrodynamics, as well as contemporary quantum optics. These different optical theories had drastically opposing views on the nature of light: some understood light as a string of corpuscles (particles), others treated light as a wave-like entity, and yet others considered light as both corpuscular and wave-like. But despite these drastic changes in our understanding of the nature of light, the law of refraction has maintained its place in our mosaic. Thus, according to structural realists, scientists are justified in accepting those claims of our theories which reveal certain underlying structures (relations), while the claims about unobservable entities are to be taken sceptically. On this view, scientists can still find the claims regarding unobservable entities useful, but they are not justified in accepting those claims. All they can legitimately do is believe that their theories provide acceptable approximations of the underlying structures that produce observable phenomena. This is another example of selective scientific realism.

Upon closer scrutiny, however, both entity realism and structural realism fail to square with the history of science. Let us begin with entity realism, according to which we are justified in accepting the existence of those unobservable entities which we manage to manipulate. This view assumes that there are claims about certain unobservable entities that maintain their positions in the mosaic despite all the changes in the claims concerning their behaviour and relations with other entities. Unfortunately, a quick glance at the history of science reveals many once-accepted unobservable entities that are no longer accepted. Consider, for instance, the theory of phlogiston accepted by chemists until the late 18th century. According to this theory, what makes something combustible is the presence of a certain substance, called phlogiston. Thus, firewood burns because it contains phlogiston. When burning, so the story goes, the firewood is being de-phlogisticated while the air around it becomes phlogisticated. In other words, according to this theory, phlogiston moves from the burning substance to the air. What’s important for our discussion is that the existence of phlogiston, an unobservable entity, was accepted by the community of the time. Needless to say, we no longer accept the existence of phlogiston. In fact, the description of combustion we accept nowadays is drastically different. Presently, chemists accept that, in combustion, a substance composed primarily of carbon, hydrogen, and oxygen (the firewood) combines with the oxygen in the air, producing carbon dioxide and water vapor. In short, the chemical entities that we accept nowadays are very different from those that we used to accept in the 18th century. When we study the history of science, we easily find many cases where a once-accepted entity is no longer accepted. Consider, for example, the four Aristotelian elements, the electric fluids of the 18th century, or the luminiferous ether of the 19th century. How then can entity realists argue that scientists are justified in accepting the existence of unobservable entities if our knowledge of these entities is as changeable as our knowledge of structures?

Of course, an entity realist can respond by saying that those entities that we no longer accept were accepted by mistake, i.e. that scientists didn’t really manage to causally manipulate them. But such a response is historically insensitive, as it assumes that only our contemporary science succeeds in properly manipulating unobservable entities, while the scientists of the past were mistaken in their beliefs that they managed to manipulate their unobservable entities. In reality, however, such “mistakes” only become apparent when a theory becomes rejected and replaced by a new theory that posits new unobservable entities. Chances are, one day our current unobservable entities will also be replaced by some new unobservables, as has happened repeatedly throughout history. Would entity realists be prepared to admit that our contemporary scientists weren’t really justified in accepting our current unobservable entities, such as quarks, leptons, or bosons, once these entities become replaced by other unobservable entities? Clearly, that would defeat the whole purpose of entity realism, which was to select those parts of our theories that successfully approximate the world.

Structural realism faces a similar objection. Yes, it is true that sometimes our claims concerning structural relations withstand major transitions from one set of unobservable entities to another. However, this is by no means a universal feature of science. We can think of many instances from the history of science where a long-accepted proposition describing a certain relation eventually becomes rejected. Consider the Aristotelian law of violent motion that was accepted throughout the medieval and early modern periods:

If the force (F) is greater than the resistance (R) then the object will move with a velocity (V) proportional to F/R. Otherwise the object won’t move.
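
In modern shorthand, the law just stated can be sketched as a piecewise relation. This is only an illustrative reconstruction: the proportionality constant k is a hypothetical symbol introduced here, and the notation is ours rather than that of the medieval sources:

```latex
V =
\begin{cases}
  k\,\dfrac{F}{R}, & \text{if } F > R \\[6pt]
  0,               & \text{otherwise}
\end{cases}
```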

Among other things, the law was accepted as the correct explanation of the motion of projectiles, such as that of an arrow shot by an archer. The velocity of the moving arrow was believed to depend on two factors: the force applied by the archer and the resistance of the medium, i.e. the air. The greater the applied force, the greater the velocity; the greater the resistance of the medium, the smaller the velocity. It was accepted that the initial force was due to the mover, i.e. the archer. But what type of force keeps the object, i.e. the arrow, moving after it has lost contact with the initial mover? Generations of medieval and early modern scholars have attempted to answer this question. Yet, it was accepted that any motion – including continued motion – necessarily requires a certain force, be it some external force or internal force stemming from the moving object itself.

Now compare this with the second law of Newtonian physics:

The acceleration (a) of a body is proportional to the net force (F) acting on the body and is inversely proportional to the mass (m) of the body.
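
In symbols, assuming units in which the constant of proportionality equals one (as is the case in SI units), the law is usually written as:

```latex
a = \frac{F}{m}
```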

How would we parse out the same archer-arrow case by means of this law? First, we notice that the mass of the arrow suddenly becomes important. We also notice that the force is now understood as the net force and that it is proportional not to the velocity but to the acceleration, i.e. the change of velocity per unit time. According to the law, the greater the net force, the greater the acceleration, and the greater the mass, the smaller the acceleration. In short, the second law expresses relations that are quite different from those expressed by the Aristotelian law of violent motion. So how can a structural realist claim that our knowledge of relations is normally preserved in one form or another?

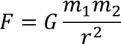

There have been other historical cases where we accepted the existence of new relations and rejected the existence of previously accepted relations. Consider, for example, the transition from Newton’s law of gravity to Einstein’s field equations which took place as a result of the acceptance of general relativity ca. 1920. Here is a typical formulation of the law of gravity:
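
In the usual modern notation, which is assumed here for illustration, F is the force of gravity with which the two objects attract each other, G is the gravitational constant, m_1 and m_2 are the masses of the two objects, and r is the distance between them:

```latex
F = G\,\frac{m_1 m_2}{r^2}
```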

And here is a typical formulation of Einstein’s field equation:
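
One common way of writing it is sketched below, under two stated assumptions: the cosmological-constant term that many formulations include is left out for simplicity, and the G on the right-hand side is the gravitational constant (not the Einstein tensor), with c the speed of light:

```latex
G_{\mu\nu} = \frac{8\pi G}{c^{4}}\, T_{\mu\nu}
```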

The two equations are not only very different visually, but they also capture very different relations. The Newtonian law of gravity posits a certain relation between the masses of two objects, the distance between them, and the force of gravity with which they attract each other. In contrast, Einstein’s field equation posits a relation between the space-time curvature expressed by the Einstein tensor (Gμν) and a specific distribution of matter and energy expressed by the stress-energy tensor (Tμν). While Newton’s law tells us what the force of gravity will be given objects with certain masses at a certain distance, Einstein’s equation tells us how a certain region of space-time will be curved given a certain arrangement of mass and energy in that region. Saying that the two equations somehow capture the same relation would be an unacceptable stretch.

In short, there are strong historical reasons against both entity realism and structural realism. Both of these versions of selective scientific realism fail to square with the history of science, which shows clearly that both our knowledge of entities and our knowledge of structures have repeatedly changed through time. This is not surprising, since as fallibilists we know that no synthetic proposition is immune to change. Clearly, there is no reason why some of these synthetic propositions – either the ones describing entities or the ones describing relations – should be any different.

In addition, there is a strong theoretical reason against selective scientific realism. It is safe to say that both entity realism and structural realism fail in their attempts due to a fatal flaw implicit in any selective approach. The goal of any selective approach is to provide some criteria – i.e. some method – that would help us separate those parts of our theories that approximate the world from those parts that are at best mere useful instruments. We’ve seen how both entity realism and structural realism attempted to provide their distinct methods for distinguishing acceptable parts of theories from those that are merely useful. The entity realist method of selecting acceptable parts would go along these lines: “the existence of an unobservable entity is acceptable if that entity has been successfully manipulated”. Similarly, the structural realist method can be formulated as “the claim about unobservables is acceptable if it concerns a relation (structure)”. Importantly, these methods were meant to be both universal and transhistorical, i.e. applicable to all fields of science in all historical periods. After our discussions in chapters 3 and 4, it should be clear why any such attempt at identifying transhistorical and universal methods is doomed. We know that methods of science are changeable: what is acceptable to one community at one historical period need not be acceptable to another community at another historical period. Even the same community can, with time, change its attitude towards a certain relation or entity.

Take, for instance, the idea of quantum entanglement. According to quantum mechanics, sometimes several particles interact in such a way that the state of an individual particle is dependent on the state of the other particles. In such cases, the particles are said to be entangled: the current state of an entangled particle cannot be characterized independently of the other entangled particles. Instead, the state of the whole entangled system is to be characterized collectively. If, for instance, we have a pair of entangled electrons, then the measurement of one electron’s quantum state (e.g. spin, polarization, momentum, position) has an impact on the quantum state of the other electron. Importantly, according to quantum mechanics, entanglement can be nonlocal: particles can be entangled even when they are separated by great distances.

Needless to say, the existence of nonlocal entanglement didn’t become immediately accepted, for it seemingly violated one of the key principles of Einstein’s relativity theory, according to which nothing can move and no information can be transmitted faster than the speed of light. Specifically, it wasn’t clear how a manipulation on one of the entangled particles could possibly affect the state of the other particle far away without transmitting this information faster than the speed of light. This was one of the reasons why the likes of Albert Einstein and Erwin Schrödinger were against accepting the notion of nonlocal entanglement. Thus, for a long time, the idea of entanglement was considered a useful calculating tool, but its reality was challenged, i.e. it wasn’t accepted as a real physical phenomenon. It was not until Alain Aspect’s experiments of 1982 that the existence of nonlocal entanglement became accepted. Nowadays, it is accepted by the physics community that subatomic particles can be entangled over large distances.

What this example shows is that the stance towards a certain entity or a relation can change even within a single community. A community may at first be instrumentalist towards an entity or a structure, like quantum entanglement, and then may later become realist about the same entity or structure. By the second law of scientific change, the acceptance or unacceptance of a claim about an entity or a relation by a certain community depends on the respective method employed by that community. Thus, to assume that we as philosophers are in a position to provide transhistorical and universal criteria for evaluating what’s acceptable and what’s merely useful would be not only anachronistic and presumptuous but would also go against the laws of scientific change. Therefore, we have to refrain from drawing any such transhistorical and universal lines between what is acceptable and what is merely useful. In other words, the approach of selective scientific realism is untenable.

This brings us to the position that can be called nonselective scientific realism. According to this view, our best scientific theories do somehow approximate the world and we are justified to accept them as the best available descriptions of their respective domains, but we can never tell which specific parts of our theories are acceptable approximations and which parts are not. Nonselective scientific realism holds that any attempt at differentiating acceptable parts of our theories from merely useful parts is doomed, since all synthetic propositions – both those describing entities and those describing relations/structures – are inevitably fallible and can be replaced in the future.

The following table summarizes the major varieties of scientific realism:

From Realism to Progress

Once we appreciate that the selective approach is not viable, we also understand that we can no longer attempt to draw transhistorical and universal lines between legitimate approximations and mere useful instruments. Where that line falls is decided by an individual community’s employed method, which can change through time. Thus, the question that a realist wants to address is not whether our theories approximate the world – they somehow do – but whether newly accepted theories provide better approximations than the previously accepted theories. This is the question of progress:

Does science actually progress towards truth?

Note that, while there have been many different notions of progress, here we are interested exclusively in progress towards the correct description of the mind-independent external world. Indeed, nobody really questions the existence of technological, i.e. instrumental progress. It is a historical fact that our ability to manipulate different phenomena has constantly increased. After all, we can now produce self-driving cars, smartphones, and fidget spinners – something we weren’t able to do not long ago. In other words, the existence of technological (instrumental, empirical) progress is beyond question. What’s at stake here is whether we also progress in our descriptions of the world as it really is. This is worth explaining.

The question of whether scientific theories progress towards truth is important in many respects. Science, after all, seems to be one of very few fields of human endeavour where we can legitimately speak of progress. The very reason why we have grant agencies funding scientific research is that we assume that our new theories can improve our understanding of the world. The belief that contemporary scientific theories are much better approximations of the world than the theories of the past seems to be implicit in the whole scientific enterprise. In short, the belief that currently accepted theories are better than those of the past is integral to our culture. This is more than can be said about many other fields of human endeavour. For example, can anyone really show that contemporary rock music is better than that of the 1970s and the 1980s? How could we even begin to compare the two? Are contemporary authors better than Jane Austen, Leo Tolstoy, or Marcel Proust? Is contemporary visual art better than that of Da Vinci or Rembrandt? It is nowadays accepted that art manages to produce different forms, i.e. different ways of approaching the subject, but the very notion that one of these forms can be better than others is considered problematic. Yet, when it comes to science, our common attitude these days is that it steadily progresses towards an increasingly better approximation of the world. Our question here is to determine whether that is really the case. What we want to establish is whether it is the case that science gradually approximates the true description of the world, or whether we should concede that science can at best give us different ways of looking at the world, none of which is better or worse.

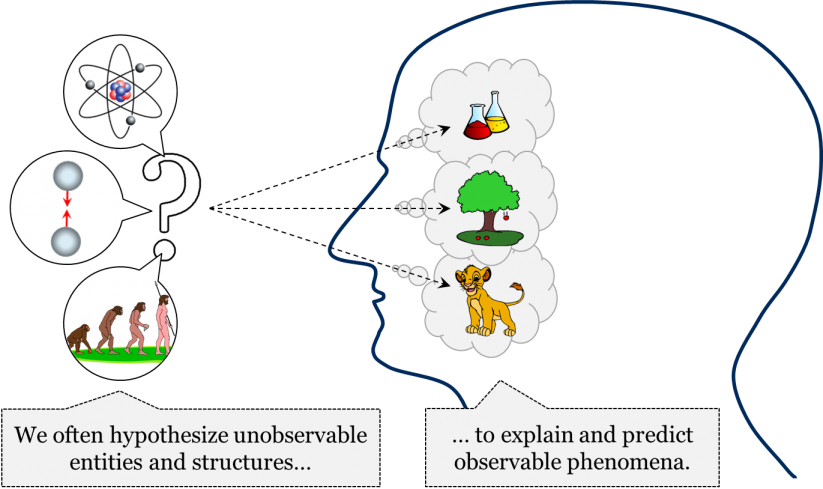

In science, we often postulate different hypotheses about unobservable entities or relations/structures so that we could explain observable phenomena. For instance, we hypothesized the existence of a certain attractive force that was meant to explain why apples fall down and why planets revolve in ellipses. Similarly, we hypothesized the existence of a certain atomic structure which allowed us to explain many observable chemical phenomena. We hypothesized the existence of evolution through mutation and natural selection to explain the observable features of biological species. In short, we customarily hypothesize and accept different unobservable entities and structures to explain the world of phenomena, i.e. to explain the world as it appears to us in experiments and observations.

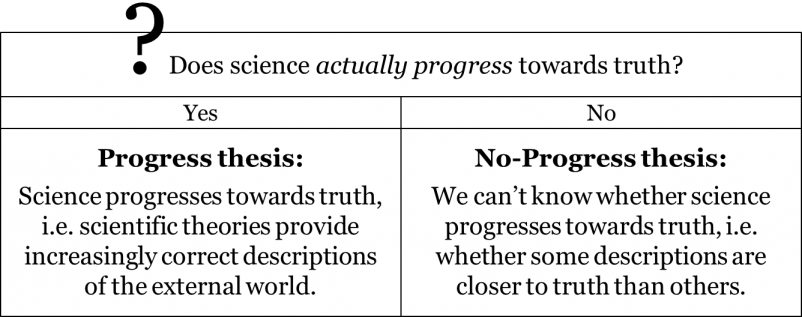

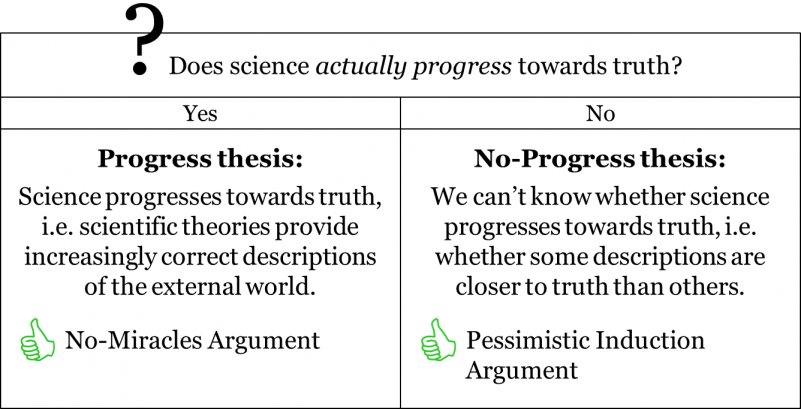

In addition, as we have already seen, sometimes scientists change their views on what types of entities and structures populate the world: the entities and relations that were accepted a hundred years ago may or may not still be accepted nowadays. But if our views on unobservable entities and structures that purportedly populate the world change through time, can we really say that we are getting better in our knowledge of the world as it really is? There are two opposing views on this question – the progress thesis and the no-progress thesis. While the champions of the progress thesis believe that science gradually advances in its approximations of the world, the proponents of the no-progress thesis hold that we are not in a position to know whether our scientific theories progress towards truth.

How can we find out which of these opposing parties is right? Naturally, we might be inclined to refer to the laws of scientific change and see what they have to tell us on the subject. Yet, a quick analysis reveals that the laws of scientific change as they currently stand do not really shed light on the issue of scientific progress. Indeed, consider the second law, according to which theories normally become accepted by a community when they somehow meet the acceptance criteria of the community’s employed method. Now recall the definition of acceptance: to accept a theory means to consider it the best available description of whatever it is the theory attempts to describe. Thus, if we ask any community, they will say that their current theories are better approximations of their objects than the theories they accepted in the past. After all, we wouldn’t accept general relativity if we didn’t think that it is better than the Newtonian theory. Any community whatsoever will always believe that they have been getting better in their knowledge of the world. While some communities may also believe that they have already achieved the absolutely true description of a certain object, even these communities will accept that their current theories are better than the theories of the past. In other words, when we look at the process of scientific change from the perspective of any community, the historical sequence of their accepted theories will always appear progressive to them.

Clearly, this approach doesn’t take us too far, since the question wasn’t whether the process of scientific change appears progressive from the perspective of the scientific community, but whether the process is actually progressive. The laws of scientific change tell us that if we were to ask any scientific community, they would subscribe to the notion of progress. Yet, to find out whether we do, in fact, progress towards truth, we need a different approach. In the remainder of this chapter, we will consider the two most famous arguments for and against the progress thesis – the no-miracles argument and the pessimistic meta-induction argument.

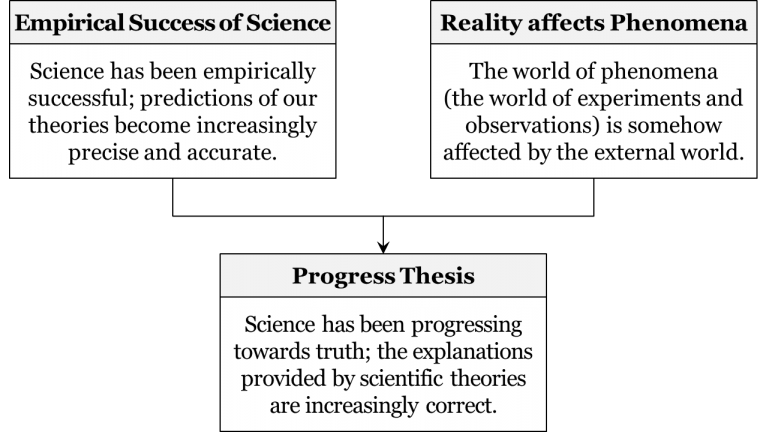

As we have already established, nobody denies that science becomes increasingly successful in its ability to manipulate and predict observable phenomena. It is this empirical success of our theories that allows us to predict future events, construct all sorts of useful instruments, and drastically change the world around us. In its ability to accurately predict and manipulate observable phenomena, our 21st-century science is undeniably head and shoulders above the science of the past. But does this increasing empirical success of our theories mean that we are also getting closer to the true picture of the world as it really is? This is where the progress thesis and the no-progress thesis drastically differ.

According to the champions of the progress thesis, the empirical success of our theories is a result of our ever-improving understanding of the world as it really is. This is because the world of phenomena cannot be altogether divorced from the external world. After all, so the argument goes, the world of phenomena is an effect of the external world upon our senses: what we see, hear, smell, taste, and touch should be somehow connected to how the world really is. Of course, nobody will claim that the world as it is in reality is exactly the way we perceive it – i.e. nobody will champion the view of naïve realism these days – but isn’t it reasonable to suggest that what we perceive depends on the nature of the external world at least to some degree? Thus, the results of experiments and observations are at least partially affected by things as they really are. But this means that by getting better in our ability to deal with the world of observable phenomena, we are also gradually improving our knowledge of the world of unobservables. In other words, as the overall predictive power of our theories increases, this is, generally speaking, a good indication that our understanding of the world itself also improves. Here is the argument:

The underlying idea is quite simple: if our theories didn’t manage to get at least something right about the world as it really is, then the empirical success of our science would simply be a miracle. Indeed, how else could we explain the unparalleled empirical success of our science if not by the fact that it becomes better and better in its approximations of the external world? Surely, if we could manage to have this much empirical success without ever getting anything right about the external world, that would be a miracle. The only reasonable explanation, say the champions of the progress thesis, is that our approximations of the world also improve, i.e. that we gradually progress towards truth. This is the gist of the famous no-miracles argument for scientific progress.

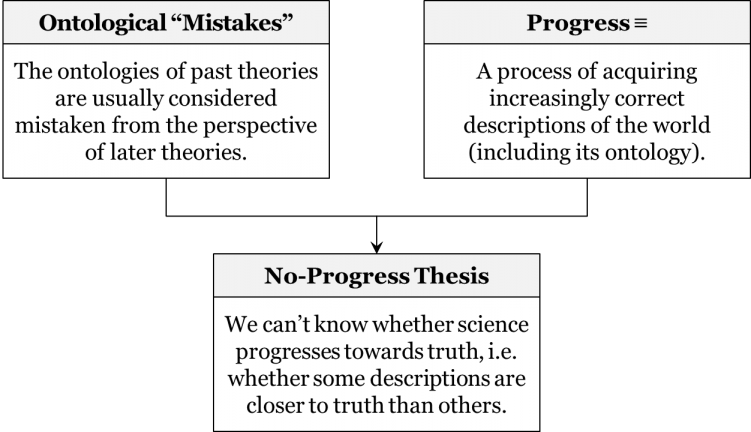

How would a champion of the no-progress thesis reply to this? One common reply is the so-called pessimistic meta-induction argument. Let us first appreciate, says a champion of the no-progress thesis, a simple historical fact: we have been quite often mistaken in our hypotheses concerning unobservable entities or structures. When we try to hypothesize what unobservable entities or structures populate the world, i.e. when we try to guess the ontology of the world, we often end up accepting entities and structures which we eventually come to reject as wrong. The history of science, so the argument goes, provides several great examples of this. Consider the idea of the four terrestrial elements of earth, water, air, and fire which were an integral part of any Aristotelian-medieval mosaic all the way into the 17th century. Similarly, recall the idea of phlogiston accepted in the 18th century. Also recall the idea of the force of gravity acting at a distance between any two objects in the universe, which was accepted until ca. 1920. It is a historical fact, say the proponents of the no-progress thesis, that our knowledge about entities and structures that populate the world has changed through time. We are often wrong in our hypotheses concerning the ontology of the world. As a result, we often reject old ontologies and accept new ones. But if the ontologies of our past theories are normally considered mistaken from later perspectives, how can we ever claim that our theories gradually get better in approximating the world? Shouldn’t we rather be more modest and say that all we know is that we are often mistaken in our hypotheses concerning the unobservable entities and structures, that we often come to reject long-accepted ontologies, and that the ontologies of our current theories could be found, one day, to be equally mistaken? In other words, we should accept that there is no guarantee that our theories improve as approximations of the world as it really is; we are not in a position to claim that science provides increasingly correct descriptions of the world. But that is precisely what the idea of progress is all about! Thus, there is no progress in science. Here is the gist of the argument:

If the long-accepted ontology of four terrestrial elements was eventually considered mistaken, then why should our ontology of quarks, leptons, and bosons be any different? The unobservable entities and structures that our theories postulate come and go, which means that in the future the ontologies of our current theories will most likely be considered mistaken from the perspective of the theories that will come to replace them. This is the substance of what is known as the pessimistic meta-induction argument.

Now, why such a strange label – “pessimistic meta-induction”? In the literature, the argument is often portrayed as inductive: because the ontologies of our past theories have been repeatedly rejected, we generalize and predict that the ontologies of the currently accepted theories will also one day be rejected. This is a meta-inductive step, as it concerns not our descriptions of the world, but our descriptions concerning our descriptions, i.e. our meta-theories (e.g. the claim that “ontologies of past theories have been rejected”). As the whole argument questions the ability of our current ontologies to withstand future challenges, it is also clearly pessimistic. It is important to note that while the inductive form of the argument is very popular in the philosophical literature, it can also be formulated as a deductive argument, as we have done above. The main reason why we nowadays believe that our claims about unobservable entities and structures can be rejected in the future is our fallibilism, for which we have several theoretical reasons, such as the problem of sensations, the problem of induction, and the problem of theory-ladenness. Since we accept that all our empirical theories are fallible, we don’t make any exceptions for our claims concerning the ontology of the world – and why should we? So, even though the argument is traditionally labelled as “pessimistic meta-induction”, it can be formulated in such a way as to avoid any direct use of induction. We don’t even have to mention the failure of our past ontologies; our fallibilism alone is sufficient to claim that our current ontologies are also likely doomed.

Regardless of how the argument is formulated, its main message remains the same: we are not in a position to say there is actual progress towards truth. Since the ontologies of past theories are usually considered mistaken from the perspective of future theories, the process of scientific change produces one faulty ontology of unobservable entities and structures after another. What we end up with is essentially a graveyard of rejected ontologies – a series of transitions where one false ontology replaces another false ontology and so on. All that science gives us is different perspectives, different ways of approaching the world, which can be more or less empirically successful, yet none of these can be said to be approximating the world better than others. Thus, all that we can legitimately claim, according to the no-progress thesis, is that science increases its overall predictive power but doesn’t take us closer to the truth.

Does this argument hold water? As opposed to the no-miracles argument, the pessimistic meta-induction argument divorces the empirical success of a theory from its ability to successfully approximate the world. Indeed, the fact that a theory is predictively accurate and can be used in practical applications doesn’t necessarily make its ontology any more truthlike. Consider, for instance, the Ptolemaic geocentric astronomy which was extremely successful in its predictions of planetary positions, but postulated an ontology of eccentrics, equants, epicycles, and deferents, which was considered dubious even in the Middle Ages. In addition, the history of science provides many examples in which several theories, with completely different ontologies, were equally successful in their predictions of observable phenomena. Thus, in the early 17th century, the Copernican heliocentric theory and the Tychonic geo-heliocentric theory posited distinct ontologies but were almost indistinguishable in their predictions of observable planetary positions. Nowadays, we have a number of different quantum theories – the so-called “interpretations” of quantum mechanics – which make exactly the same predictions but postulate very different ontologies. If theories with completely different ontologies manage to be equally successful in their predictions, then how can we even choose which of these distinct ontologies to accept, let alone argue that one of them is a better approximation of the world? The champions of the no-progress thesis do a great job highlighting this discrepancy between being empirically successful and approximating the world. In other words, they point out that, from the mere fact that the world of phenomena is affected by reality, it does not follow that by improving our knowledge of phenomena, we simultaneously improve our knowledge of the world as it really is. Thus, they question the validity of the no-miracles argument.

However, the pessimistic meta-induction argument has a fatal flaw, for it is based on the premise that our past ontologies are considered mistaken from the perspective of future theories. If we are truly fallibilists, then we should be very careful when deeming ontologies of the past as false in the absolute sense. Instead, we should accept that they are not absolutely false, but contain at least some grains of truth, i.e. that they somehow approximate the world, albeit imperfectly. For instance, we eventually came to reject the ontology of four elements, but we don’t think it was absolutely false. Instead, we think it contained some grains of truth, as it clearly resembles the contemporary idea of the four states of matter: solid, liquid, gas, and plasma. Similarly, we no longer accept the theory of phlogiston, but saying that its ontology was absolutely wrong would be a stretch; it was an approximation – a pretty bad one to be sure, but an approximation nevertheless. The fallibilist position is not that the old ontologies are strictly false, but that the ontology of general relativity and quantum physics is slightly better than the ontology of classical physics, just as the ontology of classical physics was slightly better than the ontology of Descartes’ natural philosophy, which itself was slightly better than that of Aristotle. Thus, we can’t say we commit ontological mistakes in the absolute sense. The old ontologies are rejected not because they are considered absolutely false, but because we think we have something better. A useful way of thinking of it is as a series of photographs of the same person – from the blurriest to the sharpest. Compared to the sharp photographs, the blurry photographs would be worse approximations of the person’s appearance; yet, importantly, they are all approximations. In short, the premise of ontological “mistakes” doesn’t hold water, and thus the whole argument is unsound.

Summary

We commenced this chapter by posing the question of scientific realism: do our best theories correctly describe the mind-independent external world? We have learned that the central point of contention between realists and instrumentalists doesn’t concern our ability to describe what is immediately observable, but our ability to provide trustworthy descriptions of unobservable entities and structures. While both parties agree that theories can be legitimately used in practical applications, scientific realists also believe that we can legitimately accept the claims of our theories about unobservables. We have discussed a number of sub-species of scientific realism. We’ve also seen how selective approaches fail in their attempts to differentiate acceptable parts of our theories from those that are merely useful. Our knowledge about both unobservable entities and unobservable structures changes through time and there is no transhistorical and universal method that would indicate which parts of our theories are to be accepted and which only used. Only the actual methods employed by a given community at a given time can answer that question.

We then suggested that there is a more interesting question to discuss – that of scientific progress. While it is generally agreed that our scientific theories have been enormously successful in dealing with observable phenomena, there is a heated debate on whether scientific theories gradually progress towards ever-improving approximations of the world. We’ve seen that the main argument for the no-progress thesis – the pessimistic meta-induction argument – has a serious flaw. The debate can be summed up in the following table:

While we can tentatively say that the progress thesis seems slightly better supported, we have to be cautious not to jump to the conclusion that the debate is over. Far from it: the question of progress is central to the contemporary philosophy of science and is a subject of continuous discussions.